How do you collect data to measure Core Web Vitals in Site Audit?

Core Web Vitals are a set of metrics that measure a website's loading performance, interactivity, and visual stability. They became a confirmed Google ranking factor in 2021 with the page experience update, which began rolling out in mid-June, so it is important for webmasters to address these areas.

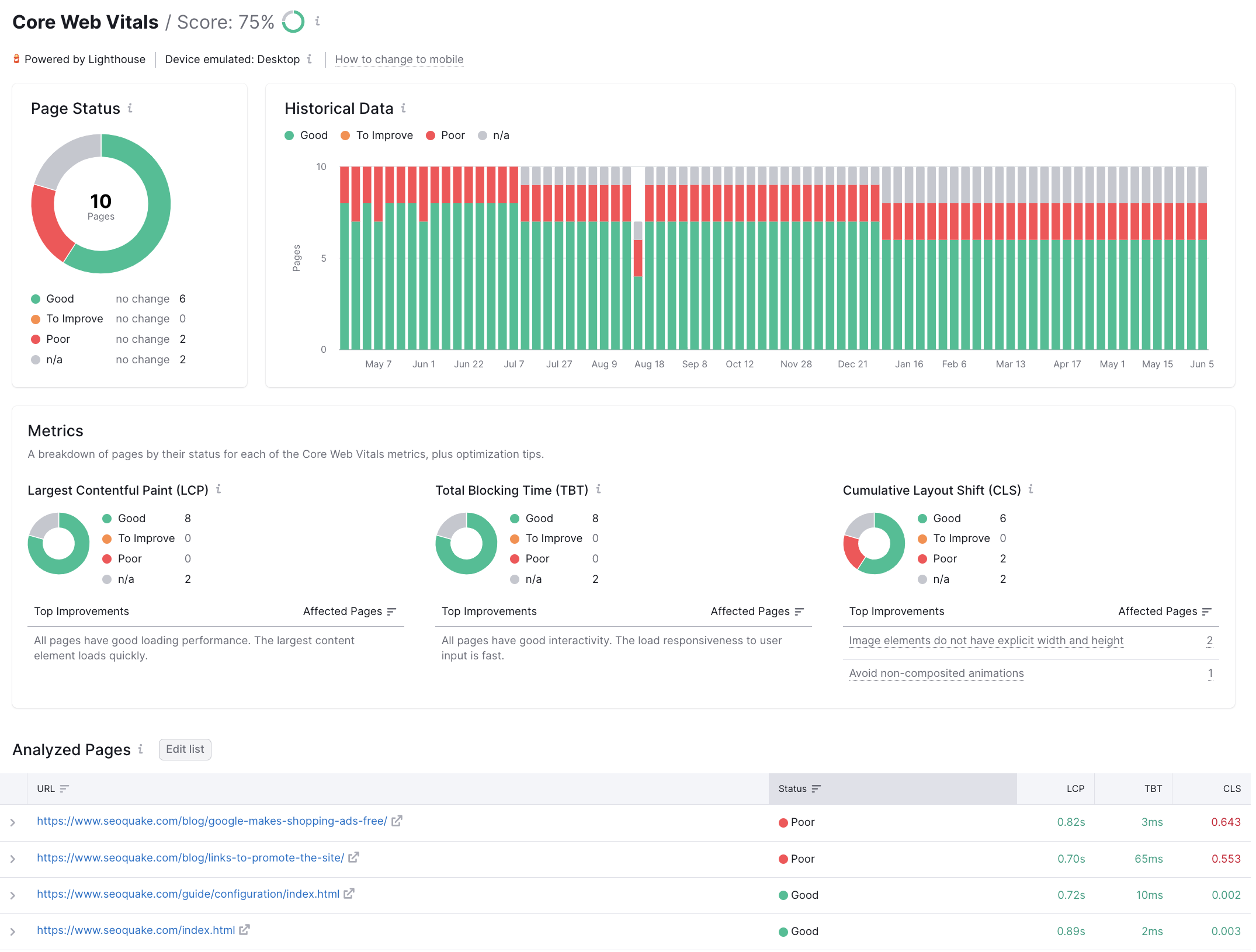

With this in mind, Semrush has incorporated a Core Web Vitals report into the Site Audit tool. Here’s how we collect the data that makes up that report.

Google Lighthouse

The primary tool we use for data collection is Google Lighthouse, an automated, open-source tool that measures Core Web Vitals in a lab environment.

Specifically, it measures Largest Contentful Paint (LCP), which judges the perceived load speed of the page, and Cumulative Layout Shift (CLS), which quantifies the amount of unexpected layout shift in the visible page content.

The third Vital, First Input Delay (FID), quantifies the user experience when a user first interacts with the page. Because FID depends on real user input, it cannot be reproduced in a lab environment. Site Audit uses Total Blocking Time (TBT) instead, which, according to Google, is an excellent lab-based proxy for FID.
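To make the TBT idea concrete, here is a minimal sketch of how the metric is commonly defined: every main-thread task longer than 50 ms contributes the portion above 50 ms, and those portions are summed. The helper name `totalBlockingTime` and the sample durations are hypothetical, and the sketch assumes the task list has already been limited to the measurement window (between First Contentful Paint and Time to Interactive). It is not the Site Audit or Lighthouse implementation.

```typescript
// A task counts as "long" once it exceeds 50 ms (per the public TBT definition).
const LONG_TASK_THRESHOLD_MS = 50;

/**
 * Hypothetical helper: sums the blocking portion of each main-thread task.
 * Assumes the durations passed in already fall inside the measurement window.
 */
function totalBlockingTime(taskDurationsMs: number[]): number {
  return taskDurationsMs
    .map((duration) => Math.max(0, duration - LONG_TASK_THRESHOLD_MS))
    .reduce((sum, blocking) => sum + blocking, 0);
}

// Illustrative task durations in milliseconds, not real measurements.
const exampleTasks = [30, 120, 45, 250];
const exampleTbt = totalBlockingTime(exampleTasks);
console.log(`TBT: ${exampleTbt} ms`); // 0 + 70 + 0 + 200 = 270
```

Only the 120 ms and 250 ms tasks contribute here, because the other two stay under the 50 ms threshold.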

Emulation

Site Audit uses the standard Lighthouse profile to emulate both mobile and desktop screen views.

Mobile

- Device screen: 360 x 640px

- Network throttling: Slow 4G

- CPU throttling: 4x slowdown (2 vCPUs 2.20 GHz)

- User agent: {based on campaign settings}

Desktop

- Device screen: 1350 x 940px

- Network throttling: 10 Mbps

- CPU throttling: No throttling (2 vCPUs 2.20 GHz)

- User agent: {based on campaign settings}
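As a rough illustration, the two profiles above could be written as Lighthouse-style settings objects. The field names (`formFactor`, `screenEmulation`, `throttling`, `emulatedUserAgent`) follow Lighthouse's public settings, but the local types and the `buildSettings` helper are hypothetical. The round-trip times and the 1.6 Mbps figure for "Slow 4G" are assumed from Lighthouse's documented throttling presets and are not stated in this article. Lighthouse's real `screenEmulation` also takes a `deviceScaleFactor`, which is omitted here because the article does not specify one.

```typescript
// Minimal local types mirroring the Lighthouse settings fields used in this sketch.
type FormFactor = "mobile" | "desktop";

interface EmulationSettings {
  formFactor: FormFactor;
  screenEmulation: { mobile: boolean; width: number; height: number; disabled: boolean };
  throttling: { rttMs: number; throughputKbps: number; cpuSlowdownMultiplier: number };
  emulatedUserAgent: string;
}

/**
 * Hypothetical helper: builds settings for one of the two profiles listed above.
 * The user agent is passed in because it depends on the campaign settings.
 */
function buildSettings(formFactor: FormFactor, userAgent: string): EmulationSettings {
  if (formFactor === "mobile") {
    return {
      formFactor,
      screenEmulation: { mobile: true, width: 360, height: 640, disabled: false },
      // "Slow 4G": 150 ms RTT and ~1.6 Mbps are assumed from Lighthouse's preset.
      throttling: { rttMs: 150, throughputKbps: 1.6 * 1024, cpuSlowdownMultiplier: 4 },
      emulatedUserAgent: userAgent,
    };
  }
  return {
    formFactor,
    screenEmulation: { mobile: false, width: 1350, height: 940, disabled: false },
    // 10 Mbps with no CPU slowdown; the 40 ms RTT is assumed from Lighthouse's desktop preset.
    throttling: { rttMs: 40, throughputKbps: 10 * 1024, cpuSlowdownMultiplier: 1 },
    emulatedUserAgent: userAgent,
  };
}

// Placeholder user agent string for illustration only.
const mobileSettings = buildSettings("mobile", "ExampleBot/1.0");
console.log(JSON.stringify(mobileSettings, null, 2));
```

With the Lighthouse Node module, an object like this would typically go under the `settings` key of a config that extends `lighthouse:default`. That wiring is left out so the sketch runs without extra dependencies.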

Note on Servers

Our servers are based in the US, so measurements for sites served from other countries may include additional network delay. If this applies to your site, we recommend monitoring the differences between crawls, rather than the absolute values, to better understand your progress. Google PageSpeed Insights is a useful tool for this.
