SEO is about much more than placing the right keywords into a page’s site copy; there are plenty of behind-the-scenes factors that can directly impact your ranking potential, and you need to take them into consideration, too.

This is where a technical SEO audit comes into play. Skipping a technical audit is like planning a once-in-a-lifetime cross-country road trip without first checking that the car is in good working order. If the car dies ten minutes down the road, it doesn’t matter how much great planning went into that trip; you’re not going to have the experience you expected.

SEO is no different. Even with visible gains in website performance, there could still be deep problems that affect how search engines like Google index the site. It doesn’t matter how great your content is or how strong your link portfolio has become if your entire backend is working against you.

In this episode of our SEO Reality Show, we’ll walk through how the expert agency conducted a technical audit for Florida-based Edelweiss Bakery, so you can see how to perform a technical SEO audit step by step.

What to Assess During a Technical SEO Audit?

A technical SEO audit is going to look at factors beyond how you’re optimizing your copy with keywords. It will typically include assessing the following:

- The configuration of robots.txt

- The configuration of the main site mirror

- Mobile optimization

- Page rendering correctness

- Site loading speeds

- The presence of broken or duplicate links

Let’s take a look at how the expert agency used technical SEO audit tools to find errors that can be auto-detected and the manual techniques they used to find anything else of concern.

Step 1 — Searching for Errors Using the Semrush Site Audit Tool

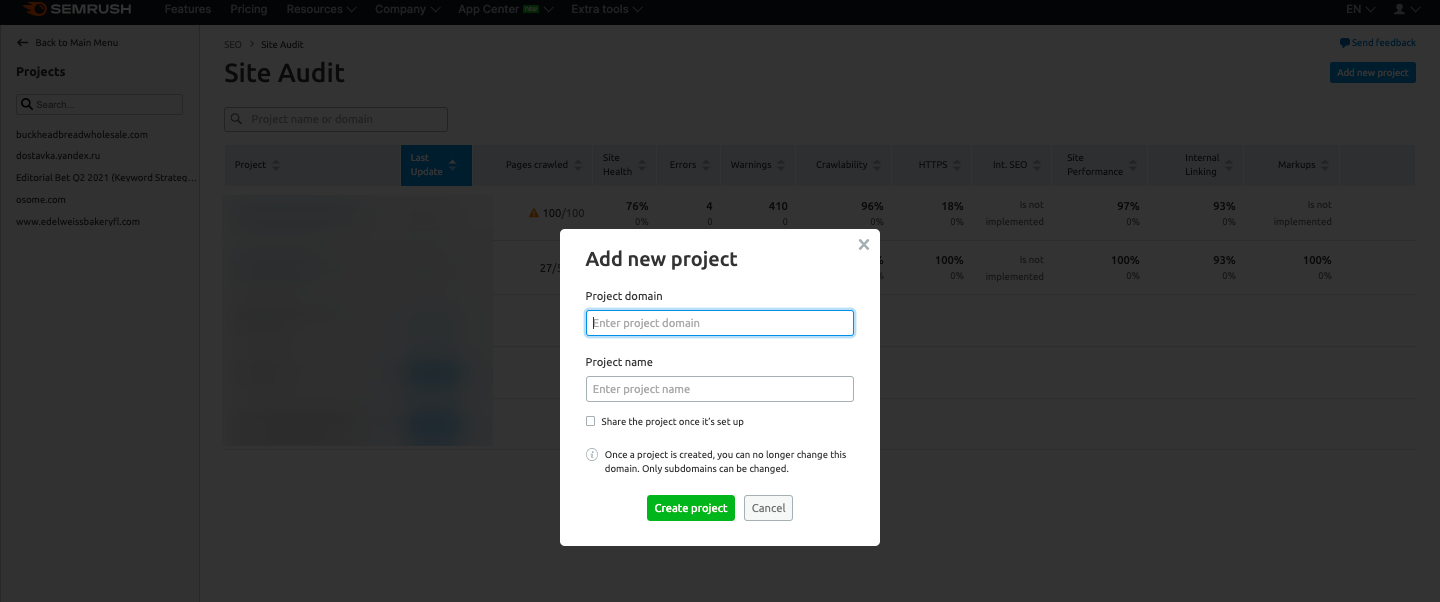

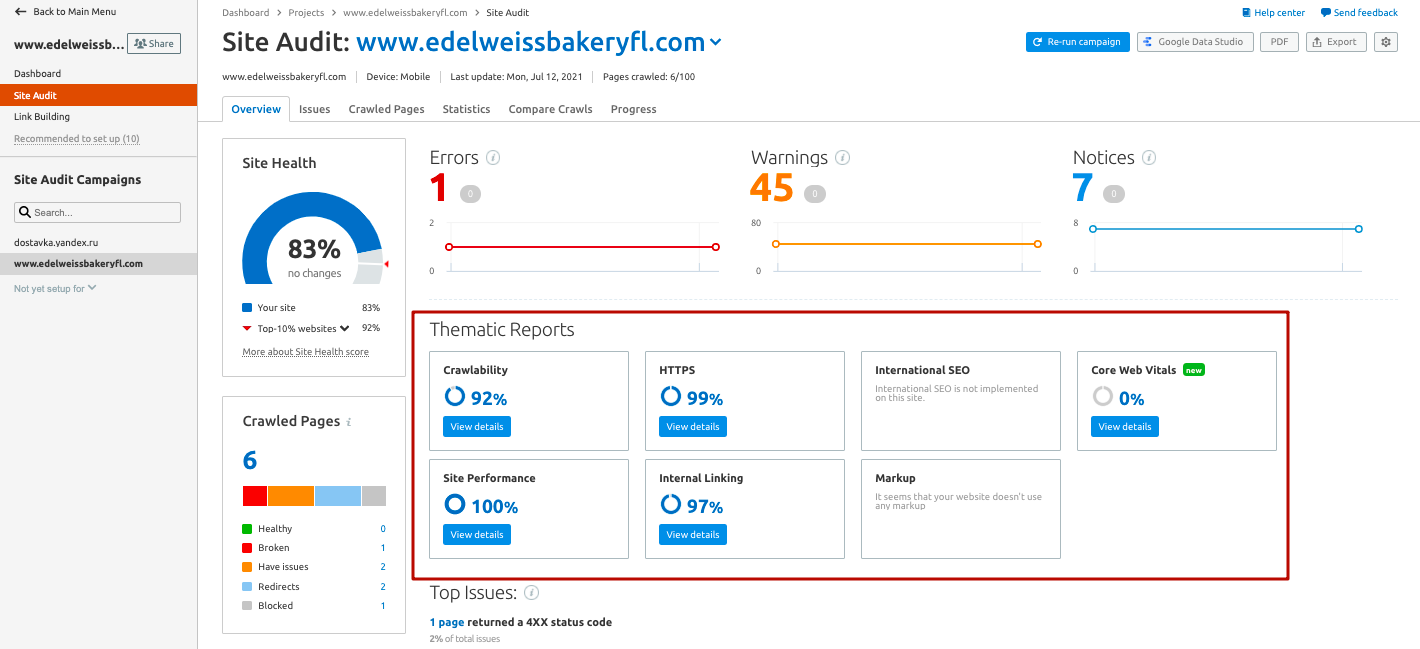

The first thing the agency needed to do was assess the site’s baseline level of technical optimization. To do this, they used the Site Audit tool from Semrush.

They created a new project, kept the default settings, and ran the audit. You can either adjust the settings here or leave them as is; if you’re not a technical SEO specialist, it’s best to stick to the default options.

1. Critical errors and warnings

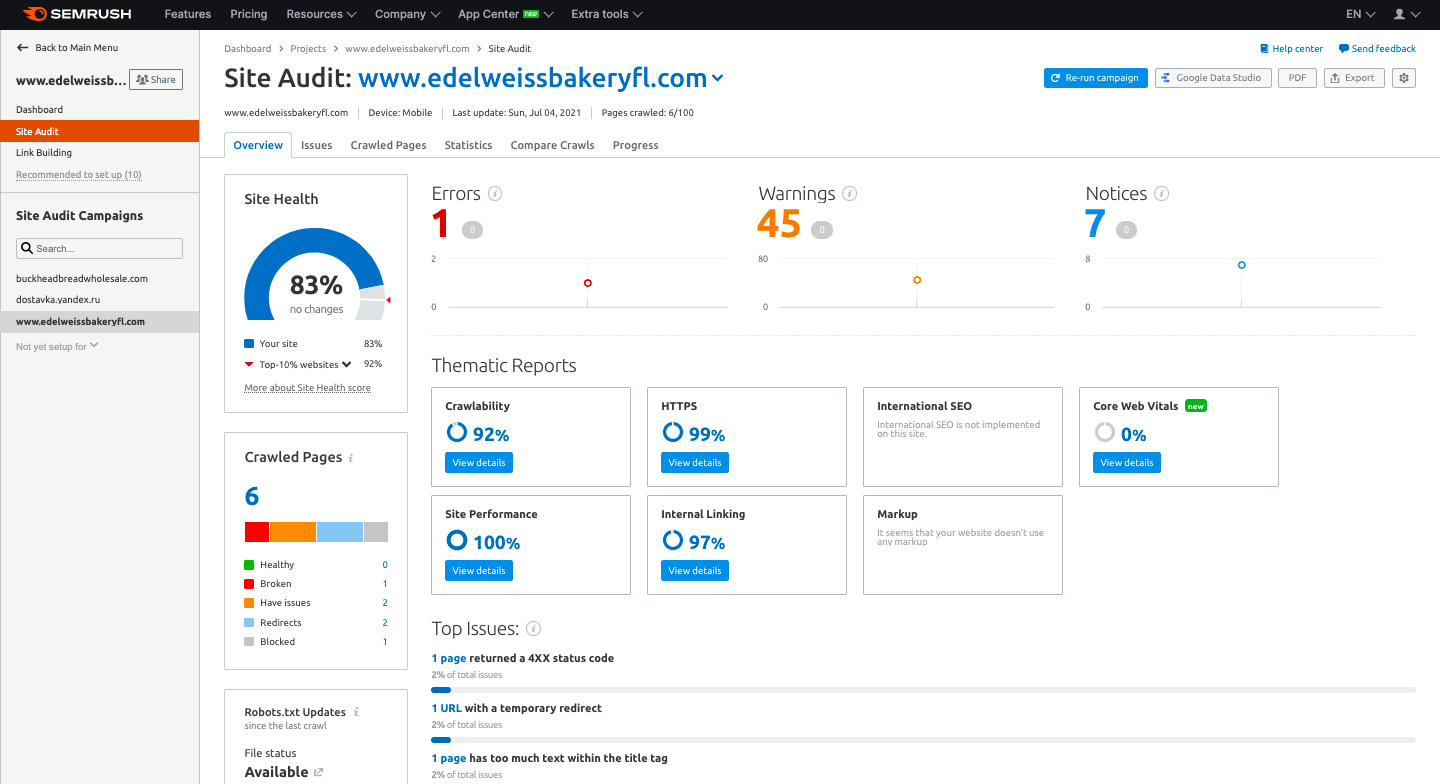

After the audit was conducted, the agency viewed the reports in the Site Audit section of the project.

First, they looked at the Errors section. This section flags any serious problems that need to be addressed right away. In this case, the bakery’s site had one critical error: a broken link to the xml sitemap.

It’s worth noting that a sitemap isn’t always necessary, though if you have a site with up to 1,000 pages it’s a good thing to have in place. Still, you do want to identify and remove broken links: every time a search robot follows a broken link it wastes crawl resources, and broken links keep users from arriving at the right location. This can lower your site’s ranking position, since Google’s robots aren’t able to crawl it properly.
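To make the idea of a broken-link check concrete, here is a minimal sketch in Python. It is not how Semrush works internally; it only illustrates the principle of collecting every link on a page and flagging those that answer with an error status. The `find_broken_links` helper, the `example.com` URLs, and the stubbed status codes are all assumptions made for illustration.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collects the href of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def find_broken_links(page_url, html, get_status):
    """Return (url, status) pairs for links whose status is 400 or higher.

    get_status is any callable mapping a URL to an HTTP status code,
    so the network layer can be swapped out or stubbed.
    """
    collector = LinkCollector()
    collector.feed(html)
    broken = []
    for href in collector.hrefs:
        url = urljoin(page_url, href)  # resolve relative links
        status = get_status(url)
        if status >= 400:
            broken.append((url, status))
    return broken


# Hypothetical page and stubbed statuses; a real check would send HTTP requests.
fake_statuses = {
    "https://example.com/": 200,
    "https://example.com/sitemap.xml": 404,
}
sample_html = '<a href="/">Home</a> <a href="/sitemap.xml">Sitemap</a>'
broken_links = find_broken_links(
    "https://example.com/", sample_html, lambda url: fake_statuses.get(url, 200)
)
print(broken_links)
```

Passing the status lookup in as a function keeps the sketch runnable offline; in practice you would replace the stub with a real HTTP request.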

When checking the sitemap, the agency came across a usability error worth noting. At the address /sitemap.xml (where the XML sitemap is usually located), they found a 404 page whose design left much to be desired. There is always a chance of broken links appearing on your site. So that users don’t get lost when they land on such a page, it’s best to give it a custom design with options to go to the home page or return to the previous page.

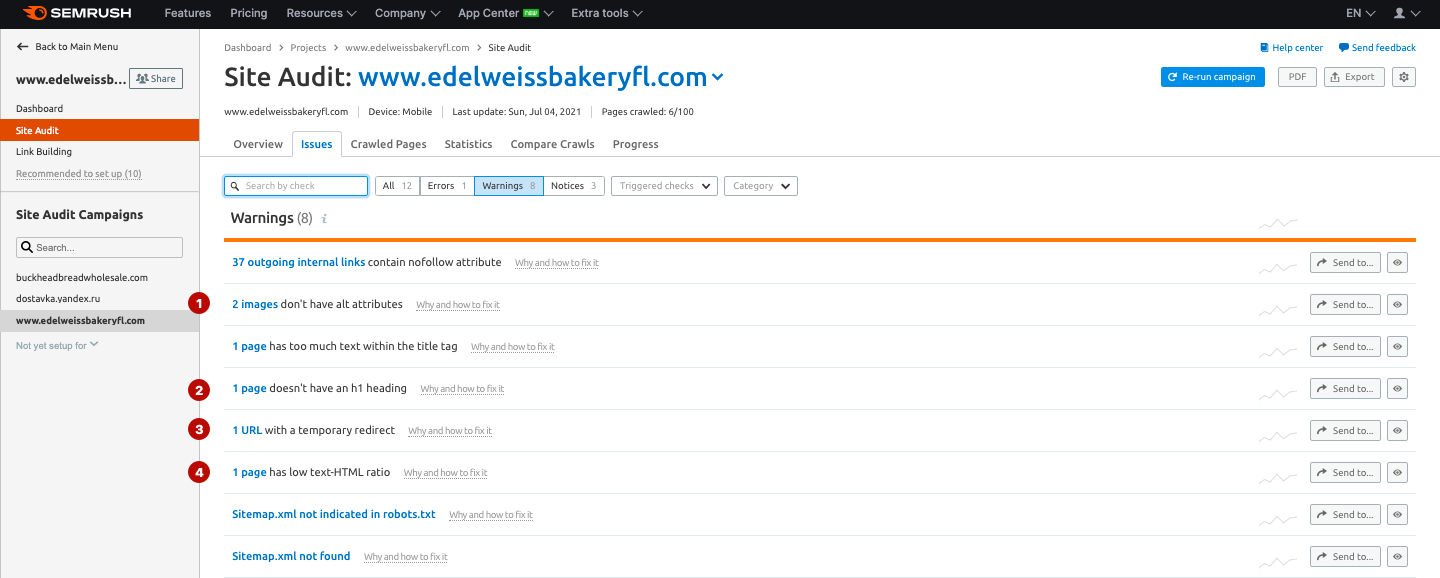

After revealing the 404 page on the bakery’s website, the agency turned their sights to the “Warnings” section in the Issues tab. There were 45 warnings in total, which aren’t as serious as critical errors but still needed to be addressed.

These were the warnings they felt were most important to adjust:

- Two images without the ALT attribute (which makes it harder for Google to understand and index the images and can hurt SEO).

- Absence of the H1 tag on one page.

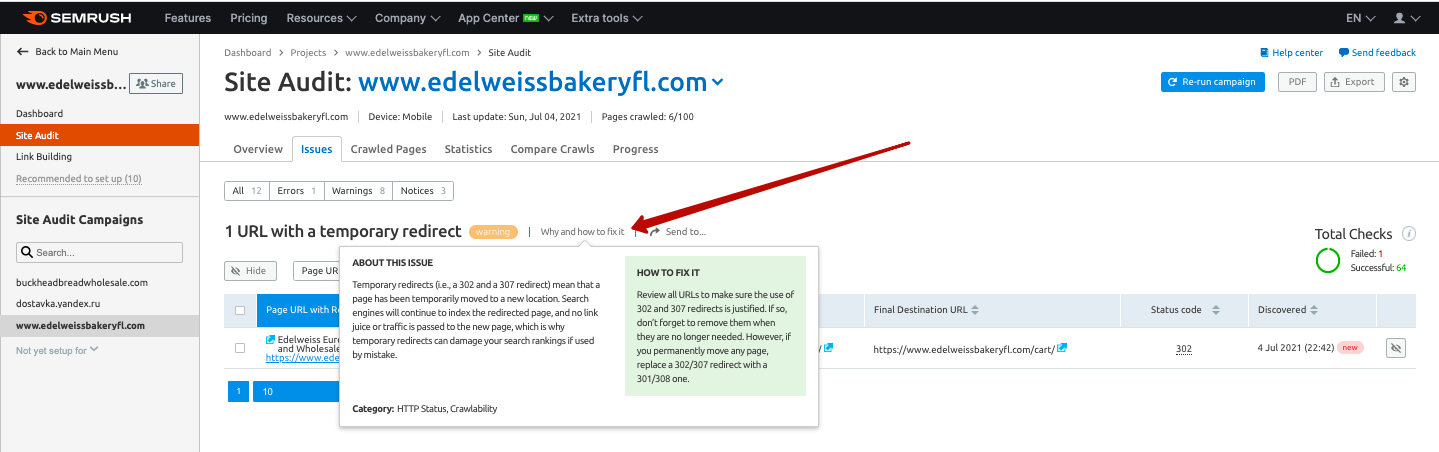

- One page responding with a 302 redirect (which, in this case, should have been a 301 redirect to the final page).

- Not enough copy on a page (critical if you are going to optimize this page for SEO).
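Several of the warnings above (missing ALT attributes, a missing H1, thin copy) can be spotted by reading a page’s HTML directly. The sketch below shows one way to do that with Python’s standard library. The `OnPageAudit` class and the sample markup are hypothetical, and how many words count as “enough copy” is left to you.

```python
from html.parser import HTMLParser


class OnPageAudit(HTMLParser):
    """Counts H1 tags and visible words, and lists images with no alt attribute."""

    def __init__(self):
        super().__init__()
        self.images_missing_alt = []
        self.h1_count = 0
        self.word_count = 0
        self._skip_depth = 0  # greater than 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "img" and "alt" not in attrs:
            self.images_missing_alt.append(attrs.get("src", ""))
        elif tag == "h1":
            self.h1_count += 1
        elif tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Only count text that a visitor would actually read.
        if not self._skip_depth:
            self.word_count += len(data.split())


# Hypothetical markup for illustration.
sample_page = """
<html><body>
<h2>Our cakes</h2>
<img src="cake.png">
<img src="logo.png" alt="Edelweiss Bakery logo">
<p>Fresh bread daily.</p>
<script>var x = 1;</script>
</body></html>
"""

audit = OnPageAudit()
audit.feed(sample_page)
print("Images without alt:", audit.images_missing_alt)
print("H1 tags:", audit.h1_count)
print("Visible words:", audit.word_count)
```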

When you’re replicating this process for your site, keep in mind that you don’t need to spend an exorbitant amount of time working out which errors are in play, why they matter, and how to fix them. The Semrush audit tool has your back: each warning comes with quick tips on what’s wrong and how to fix it. Just click the “Why and how to fix it” link and you will see a tooltip with all the necessary information. Then you can send tasks to Trello and Zapier to automate the error-fixing process.

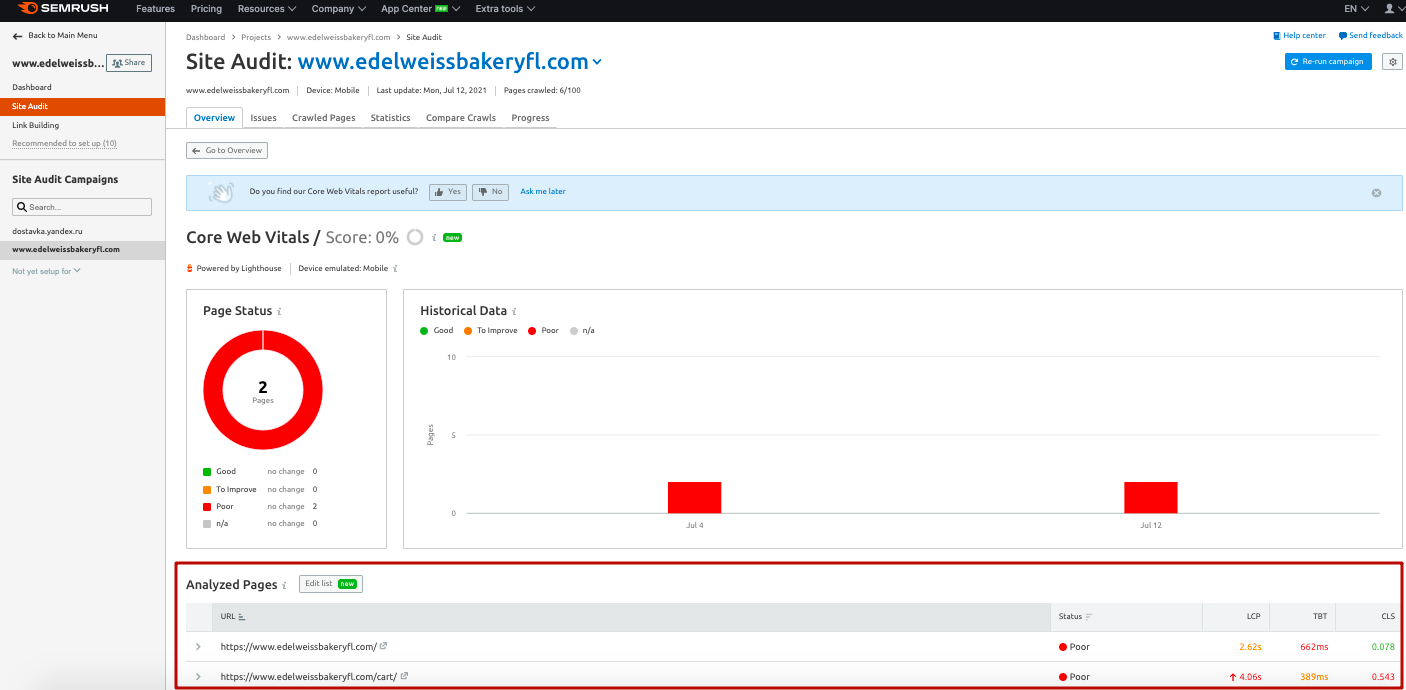

The agency then proceeded to carefully move through all the reports while making notes. They found that poor performance of Core Web Vitals and a lack of markup needed to be addressed.

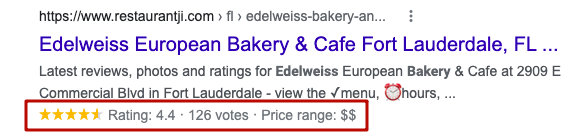

2. Structured Data

While a lack of markup (aka “structured data”) on the site isn’t necessarily a critical error, it’s a big missed opportunity that could be hurting the bakery’s SEO potential. Structured data allows you to display additional information in the search results snippet while also setting your brand apart.

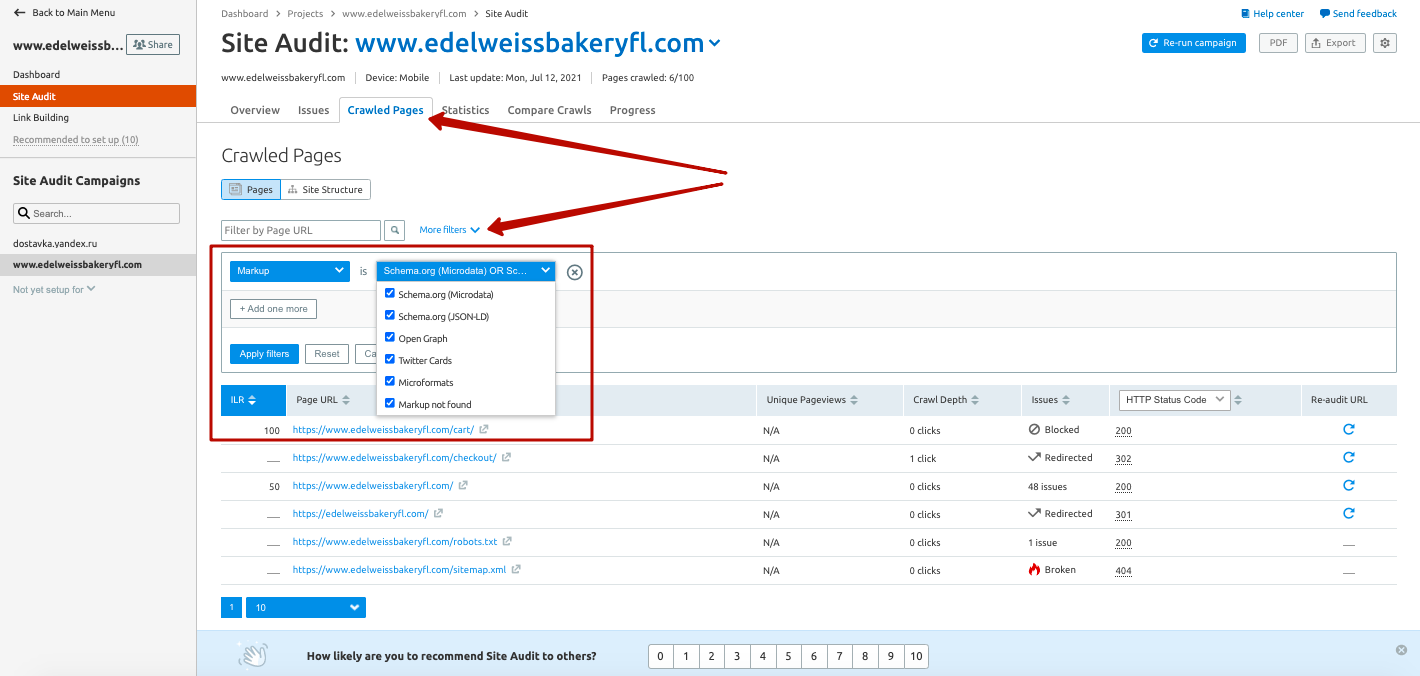

It’s exceptionally easy to check your site’s markup presence using Semrush. Just go to the Crawled Pages tab after you conduct your audit, then select “Markup.” You can filter the results by the types of markup that you’d like to review. In addition to reviewing JSON-LD markup, you can also check for Open Graph and Twitter Card markup.

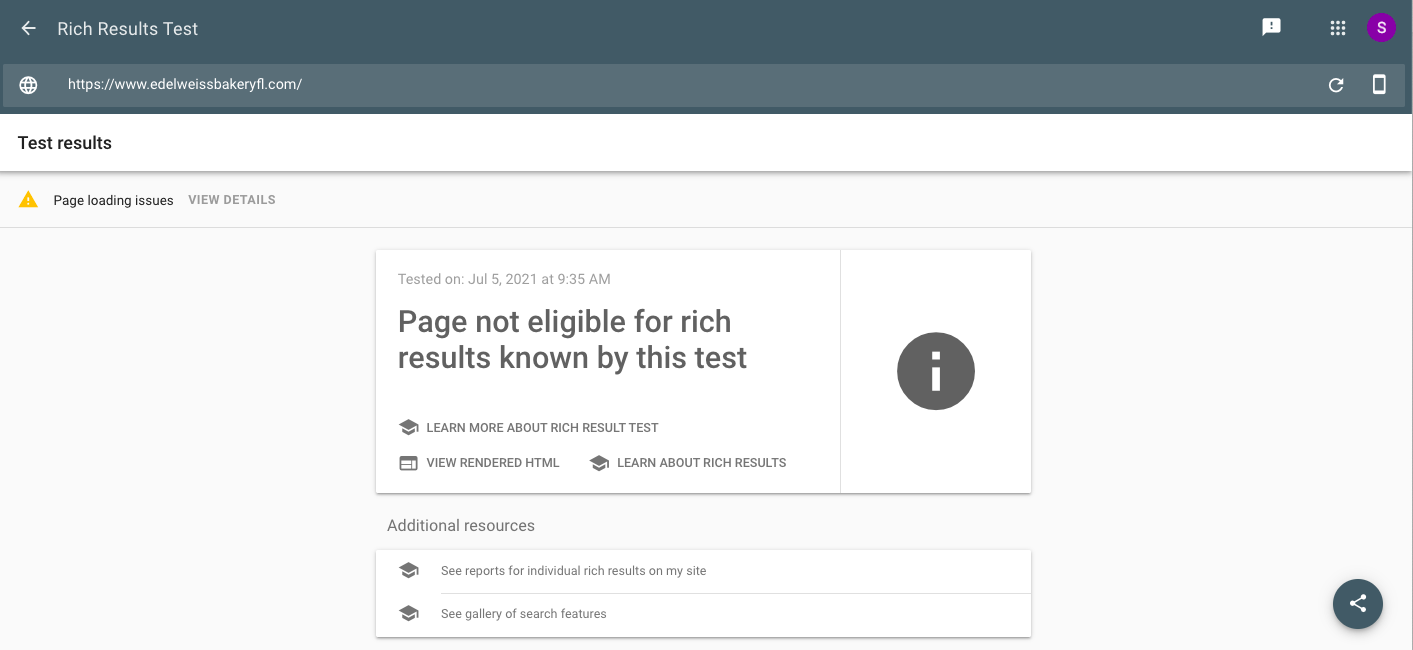

If you need a page-by-page check, you can use Google’s free tool here.

In the bakery’s case, there was no markup at all. The types of markup that should be applied to the home page (so far, the only page the site has) are Local Business (or Organization) and Restaurant.

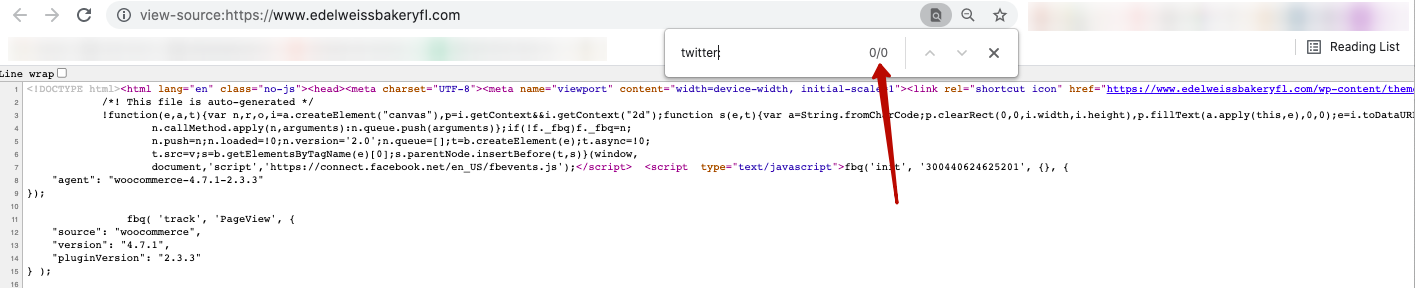
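For reference, structured data of this kind is usually embedded as a JSON-LD script tag. The sketch below builds one for the Restaurant type with Python’s `json` module. The business name, URL, and state come from this article; the choice of properties and the `to_script_tag` helper are assumptions, and a real implementation would add the full address, opening hours, and other details.

```python
import json

# Minimal Restaurant markup; properties beyond name and url are illustrative.
structured_data = {
    "@context": "https://schema.org",
    "@type": "Restaurant",
    "name": "Edelweiss Bakery",
    "url": "https://www.edelweissbakeryfl.com/",
    "address": {
        "@type": "PostalAddress",
        "addressRegion": "FL",  # the article only states that the bakery is Florida-based
        "addressCountry": "US",
    },
}


def to_script_tag(data):
    """Wrap a dict as a JSON-LD script tag ready to paste into the page head."""
    body = json.dumps(data, indent=2)
    return f'<script type="application/ld+json">\n{body}\n</script>'


snippet = to_script_tag(structured_data)
print(snippet)
```

After adding the tag to a page, run it through a markup validator before relying on it.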

It’s also a good idea to add Open Graph markup to your pages so that links to your site get attractive snippets when someone shares them on social media. Twitter Cards markup serves the same purpose on Twitter.

To check for the presence of this markup, you can use the markup verification services in Semrush, use the official validators from Facebook and Twitter, or look at the source code of your site for Open Graph tags. These will appear in the following formats:

- og:title

- og:type

- og:image

- og:url

This is how it will appear for Twitter Card markup:

- twitter:title

- twitter:description

- twitter:creator

- twitter:site

In the bakery’s case, both types of markup were missing.

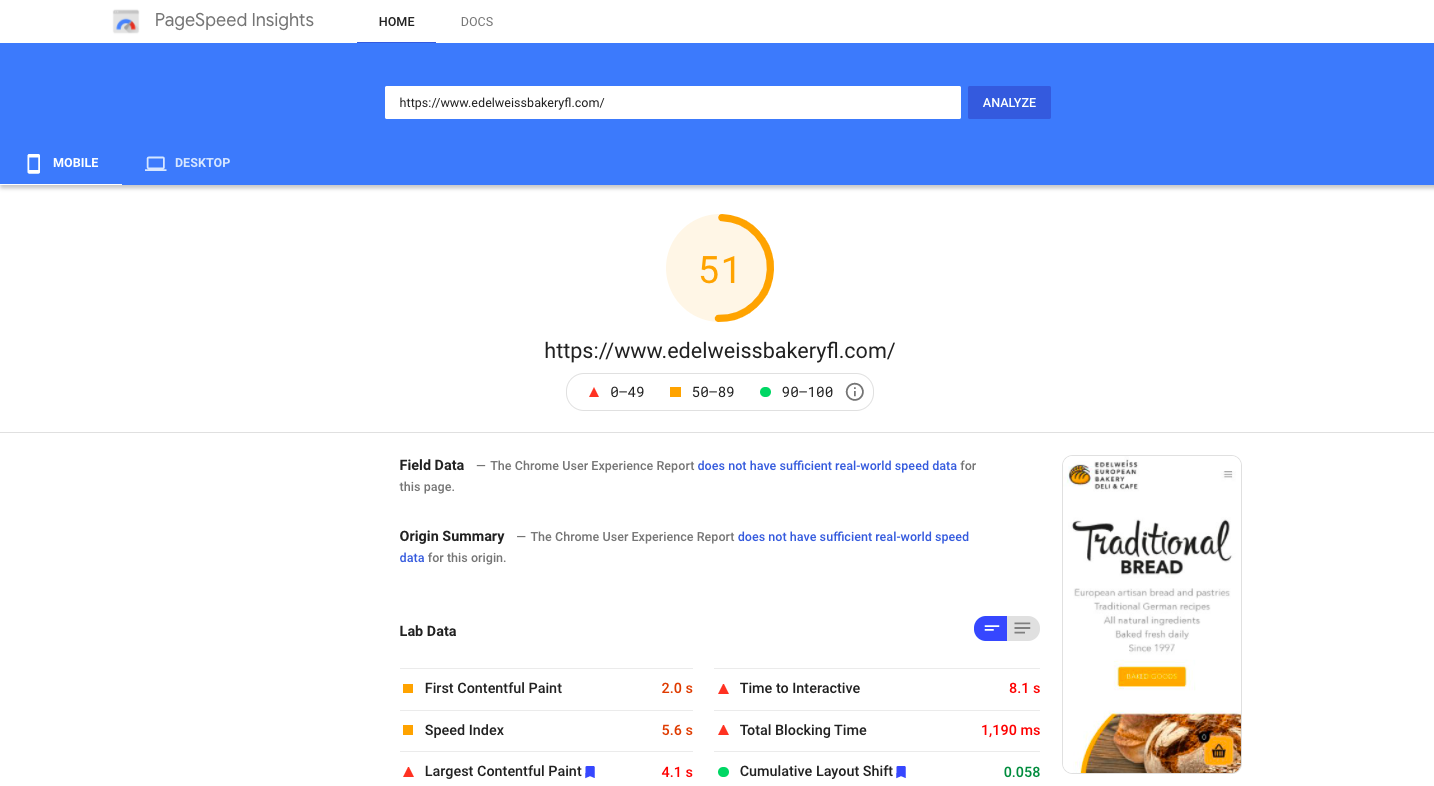
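If you prefer to check the source code yourself, the lookup can be scripted. The following sketch scans a page’s meta tags and reports which of the Open Graph and Twitter Card properties listed above are missing. The `missing_social_tags` helper and the sample head markup are hypothetical.

```python
from html.parser import HTMLParser

REQUIRED_OG = {"og:title", "og:type", "og:image", "og:url"}
REQUIRED_TWITTER = {
    "twitter:title",
    "twitter:description",
    "twitter:creator",
    "twitter:site",
}


class MetaCollector(HTMLParser):
    """Collects the property/name of every <meta> tag that has content."""

    def __init__(self):
        super().__init__()
        self.found = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attrs = dict(attrs)
        # Open Graph tags use "property"; Twitter Cards usually use "name".
        key = attrs.get("property") or attrs.get("name")
        if key and attrs.get("content"):
            self.found.add(key.lower())


def missing_social_tags(html):
    """Return the required tags that are absent, grouped by markup type."""
    collector = MetaCollector()
    collector.feed(html)
    return {
        "open_graph": sorted(REQUIRED_OG - collector.found),
        "twitter": sorted(REQUIRED_TWITTER - collector.found),
    }


# Hypothetical page head for illustration.
sample_head = """
<head>
<meta property="og:title" content="Edelweiss Bakery">
<meta property="og:url" content="https://www.edelweissbakeryfl.com/">
</head>
"""
missing = missing_social_tags(sample_head)
print(missing)
```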

3. Page Load Speed

Page loading speed is an extremely important indicator and contributing factor when it comes to SEO potential.

This is because Google’s search engine engineers are focused entirely on delivering a great user experience. This means that they’ll prioritize high-quality content on reliable sites that deliver strong user experiences. And sites that take too long to load are not compatible with an overwhelmingly positive user experience in this day and age.

Google even defined a named set of metrics for loading speed and user experience, along with guidance on how they should be measured: Core Web Vitals, which serve as ranking signals. If you’re interested, you can read more about Google’s Core Web Vitals here.

To see the impact of your site’s loading speed, you can use Semrush’s Core Web Vitals Report. It shows how close your indicators are to the recommended values so that you can optimize your site as needed.

This tool is perfect if you want to look for pages with slow loading speeds, or for groups of pages that share a single issue (like an error that appears only on product cards).

When you want to prepare the list of technical specifications that programmers will use to eliminate problems with site loading speed, however, a more detailed analysis is necessary. Google’s PageSpeed Insights is the tool to use.

Fortunately, the tool is easy to use. Here, the agency entered the bakery’s domain name and quickly got results, organized under three different labels:

- Green means that things here are working perfectly

- Yellow is okay, but with optimization potential

- Red indicates that there are serious issues that need to be reconciled to improve your loading speed
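The color labels map onto numeric thresholds. As a rough sketch, the snippet below rates three Core Web Vitals using the “good” and “poor” boundaries Google publishes at the time of writing; these numbers are not from the audit itself, and the sample measurements are made up for illustration.

```python
# (good_up_to, poor_above) boundaries per metric; assumed from Google's
# published Core Web Vitals guidance, not taken from the bakery audit.
THRESHOLDS = {
    "lcp_seconds": (2.5, 4.0),       # Largest Contentful Paint
    "inp_milliseconds": (200, 500),  # Interaction to Next Paint
    "cls": (0.1, 0.25),              # Cumulative Layout Shift
}


def rate(metric, value):
    """Map a measurement onto the green / yellow / red labels."""
    good, poor = THRESHOLDS[metric]
    if value <= good:
        return "green"
    if value <= poor:
        return "yellow"
    return "red"


# Hypothetical measurements for a single page.
measurements = {"lcp_seconds": 3.1, "inp_milliseconds": 180, "cls": 0.31}
ratings = {metric: rate(metric, value) for metric, value in measurements.items()}
print(ratings)
```

A table like this is handy when you hand a list of pages to developers and want to sort it by severity.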

Step 1 Summary

We just went over a lot of information, so here’s a quick summary of what happened.

During the verification by the Semrush service, the agency identified the following errors on the bakery’s website:

- The presence of a broken link to the xml sitemap

- Two images without the ALT attribute

- No H1 tag on 1 page

- One page gives a 302 redirect

- A small amount of text on the page

- A lack of markup

- Poor performance of Core Web Vitals

This isn’t a bad starting point, but the agency knew that looking for more details with a manual audit would be an important step.

Step 2 — Searching for Additional Errors

In this section, the agency decided to go beyond Semrush’s initial report, manually looking for additional potential issues.

1. Verification of robots.txt

The robots.txt file contains the directives that tell search engines which parts of your site should be crawled and indexed, and which shouldn’t be. The parts that are typically closed off may include:

- System files and folders

- Sections with commercial information

- Payment information

There is, however, no universal rule here about what should be indexed, and the composition of the file itself depends on the CMS that you’re using to run the site.

There is one thing you’ll want to do: Ensure your site is open for crawling and indexing by search robots once it’s past the development stage.

In the case of our bakery, the agency knew that the robots.txt would require adjustments because, in their experience, the default directives in WordPress (the CMS the bakery used for their site) aren’t specific enough.
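One quick way to sanity-check a robots.txt file is to parse it and ask which URLs a crawler may fetch. The sketch below does this with Python’s built-in `urllib.robotparser`. The directives shown are a common WordPress-style example, not the bakery’s actual file, and Python’s parser applies rules in file order, which can differ from how Google resolves conflicting rules.

```python
from urllib.robotparser import RobotFileParser

# Illustrative WordPress-style rules; not the bakery's real robots.txt.
ROBOTS_TXT = """\
User-agent: *
Allow: /wp-admin/admin-ajax.php
Disallow: /wp-admin/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

SITE = "https://www.edelweissbakeryfl.com"
paths = ["/", "/wp-admin/", "/wp-admin/admin-ajax.php"]

# True means the crawler is allowed to fetch the path.
crawlable = {path: parser.can_fetch("Googlebot", SITE + path) for path in paths}
for path, allowed in crawlable.items():
    print(f"{path}: {'allowed' if allowed else 'blocked'}")
```

If the home page ever shows up as blocked here, the site is closed to crawling and should be opened before launch.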

2. Mobile Rendering

Mobile site optimization is crucial because so much internet traffic is now coming from mobile. At this stage, the agency checked for mobile site optimization. This included reviewing how well the site worked and how fast it loaded on mobile devices, and how completely the search robot was able to see the site’s mobile pages.

This tool from Google will help you assess how mobile-friendly your site is. It’s a good starting point for seeing how Google’s search robot sees your mobile site. If its view differs from what you see, Google isn’t rendering the page correctly, which can hurt your search potential and (in some cases) the user experience.

The agency found that this exact scenario was the case for the bakery, and knew this was something they’d need to address.

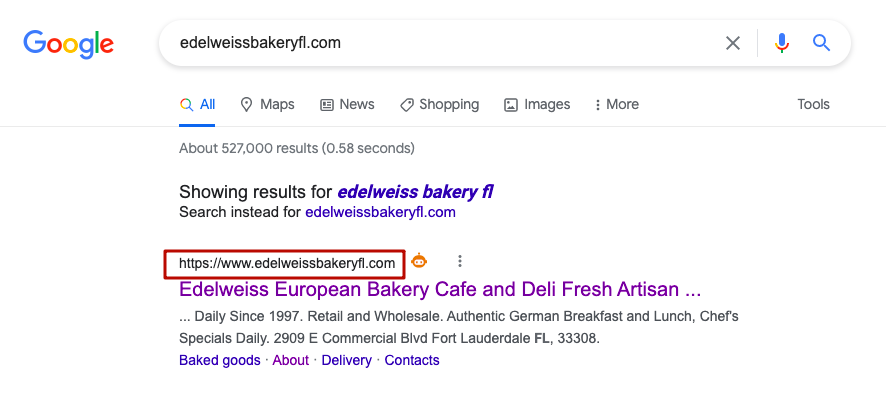

3. Configuring the main mirror of the site

Most sites have “mirrors,” or duplicates. These are often used as archives or even test sites for development. The main mirror of the site is the full domain name of the site that is specified as the main address. It’s considered the “main” site because, unlike the others, it participates in search results. It’s designed to be accessed by users.

It’s essential for the search engine to understand which of the mirrors is the main site that should actually be served in search results. To do this, you need to specify it explicitly by “gluing” the mirrors together.

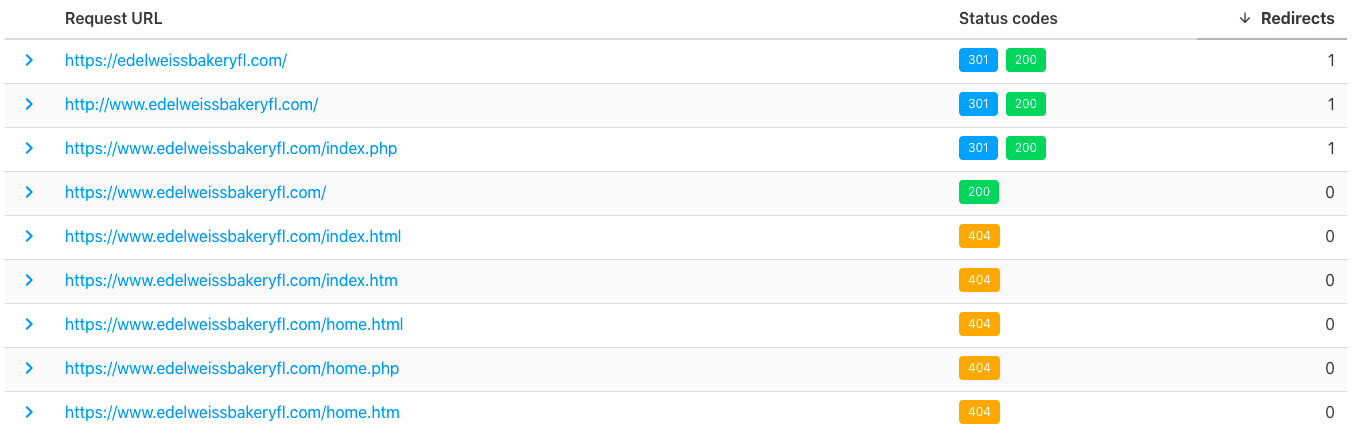

To see which of your site’s mirrors appear in search, you can use Google Search Console and see what shows up. You can also check it manually by typing the site’s domain name into the Google search bar. In our case, the main mirror is https://www.edelweissbakeryfl.com/.

To check the settings of the main mirror, you then need to type in the variations of the domain name of your site and see if there is a 301 redirect to the main mirror. You can check this using this service.

The main combinations that need to be checked are:

- site.com with “www” and without “www”

- site.com with https and http (if you have an SSL or TLS certificate installed)

- site.com/index.html

- site.com/index.php

- site.com/index.htm

- site.com/home.html

- site.com/home.php

- site.com/home.htm

There may be additional combinations depending on your CMS. If the main mirror is not configured, ask your programmer to set up 301 redirects to it. In the bakery’s case, everything was configured correctly.

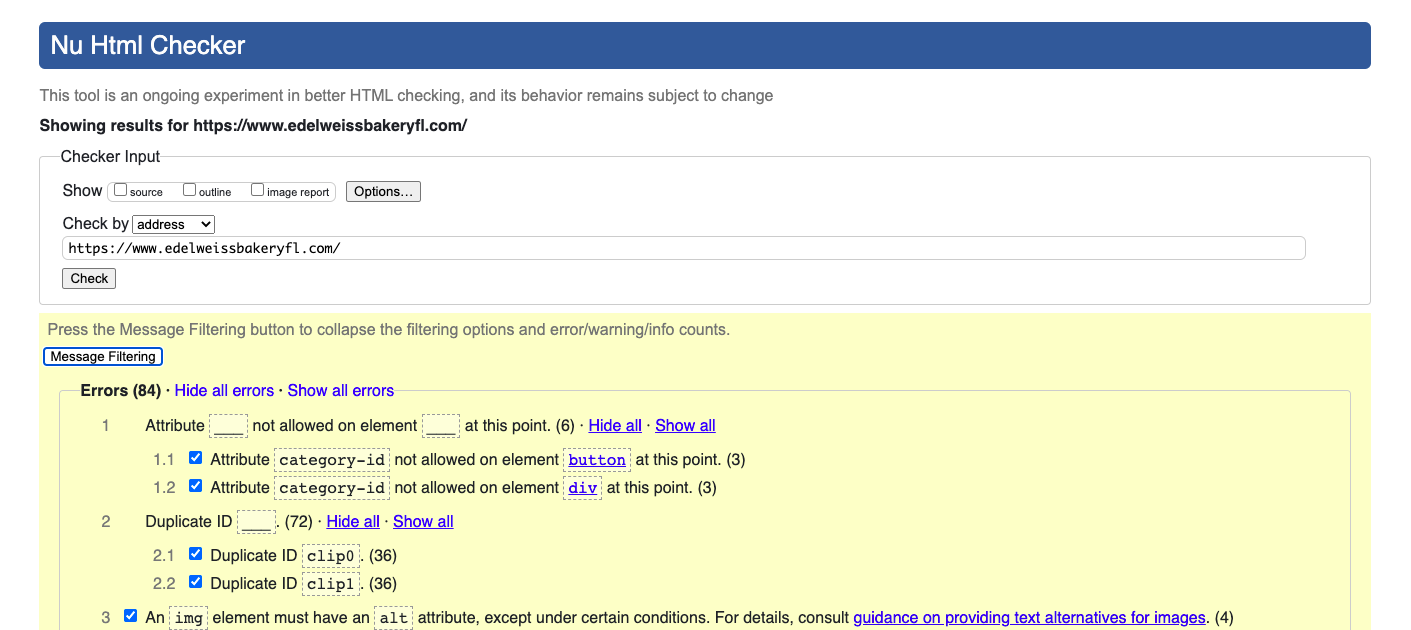
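The combinations listed above can also be generated and checked with a short script. This sketch builds the variants for a given main mirror and interprets the response each one returns. The helper names are hypothetical, the script assumes the server sends absolute URLs in its Location header, and it deliberately does not follow redirect chains.

```python
import http.client
from urllib.parse import urlsplit

INDEX_FILES = ["index.html", "index.php", "index.htm",
               "home.html", "home.php", "home.htm"]


def mirror_variants(main_mirror):
    """List the URL variants that should 301-redirect to the main mirror."""
    parts = urlsplit(main_mirror)
    host = parts.netloc
    bare = host[4:] if host.startswith("www.") else host
    root = f"{parts.scheme}://{host}/"
    variants = [
        f"{scheme}://{h}/"
        for scheme in ("http", "https")
        for h in (bare, "www." + bare)
    ]
    variants += [root + name for name in INDEX_FILES]
    return [v for v in variants if v != root]  # drop the main mirror itself


def fetch_redirect(url):
    """Return (status, Location header) without following the redirect."""
    parts = urlsplit(url)
    conn_cls = (http.client.HTTPSConnection if parts.scheme == "https"
                else http.client.HTTPConnection)
    conn = conn_cls(parts.netloc, timeout=10)
    try:
        conn.request("HEAD", parts.path or "/")
        response = conn.getresponse()
        return response.status, response.getheader("Location")
    finally:
        conn.close()


def verdict(status, location, main_mirror):
    """Interpret one response against the expected 301 to the main mirror."""
    if status == 301:
        if location == main_mirror:
            return "ok"
        return "301, but to a different URL (check for a redirect chain)"
    if status in (302, 303, 307):
        return "temporary redirect, should be 301"
    return f"no 301 redirect (status {status})"


MAIN = "https://www.edelweissbakeryfl.com/"
variants = mirror_variants(MAIN)
for url in variants:
    print(url)
    # To test against the live site, uncomment the next line:
    # print("  ", verdict(*fetch_redirect(url), MAIN))
```

The network call is left commented out so the script only prints the list of variants by default.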

4. Layout errors

Layout errors can significantly complicate how search robots read and interpret the code of your page. They can also impact the display of the site across different browsers or devices. Because of this, it’s a priority to get rid of any layout errors.

The agency used two different validators to check for layout errors on the bakery’s website: one for the HTML code and one for the CSS code. They found more errors that needed to be fixed.

Step 2 Summary

During an additional check, the agency found the following errors:

- Incorrect robots.txt

- Problems with page rendering

- Layout errors

At this point, the agency could be confident that they had covered everything they could, and that eliminating the errors they found would benefit the site.

Next Up

After the manual assessment, the technical SEO audit was over! The agency compiled the full list of concerns into a single document for developers, who then went to work optimizing the site. With the technical fixes in place, the bakery’s site was well on its way to improving its search ranking potential.

Our next episode is going to look at on-site optimization, including the layout of the product page, meta tags, and technical specifications for the copy of the home page. This is one episode you definitely don’t want to miss, so stay tuned!
