Whether you're crafting a sales email or landing page, it's hard to predict how customers will react. Even small details like headline length or the color of your call-to-action (CTA) buttons can impact conversion rates.

One way to discover what drives your audience to take action is to conduct A/B testing.

In this guide, we’ll show you how to run an A/B test the right way to get results you can trust. Let’s dive in.

What Is A/B Testing?

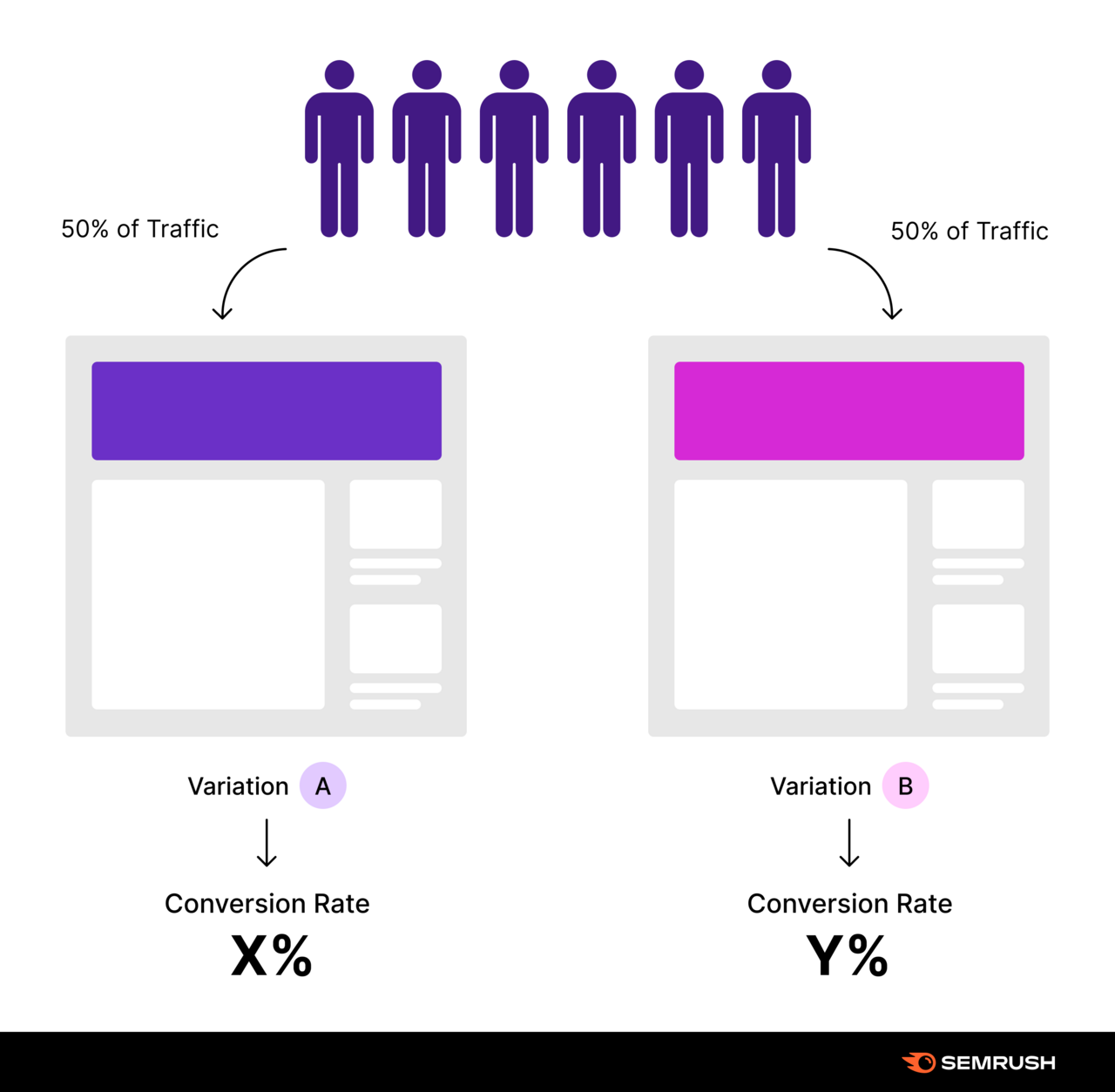

A/B testing, or split-testing, is a research method used in marketing, web development, and user experience (UX) design. Marketing teams compare two versions of a web page, email, or other digital asset to see which one performs better.

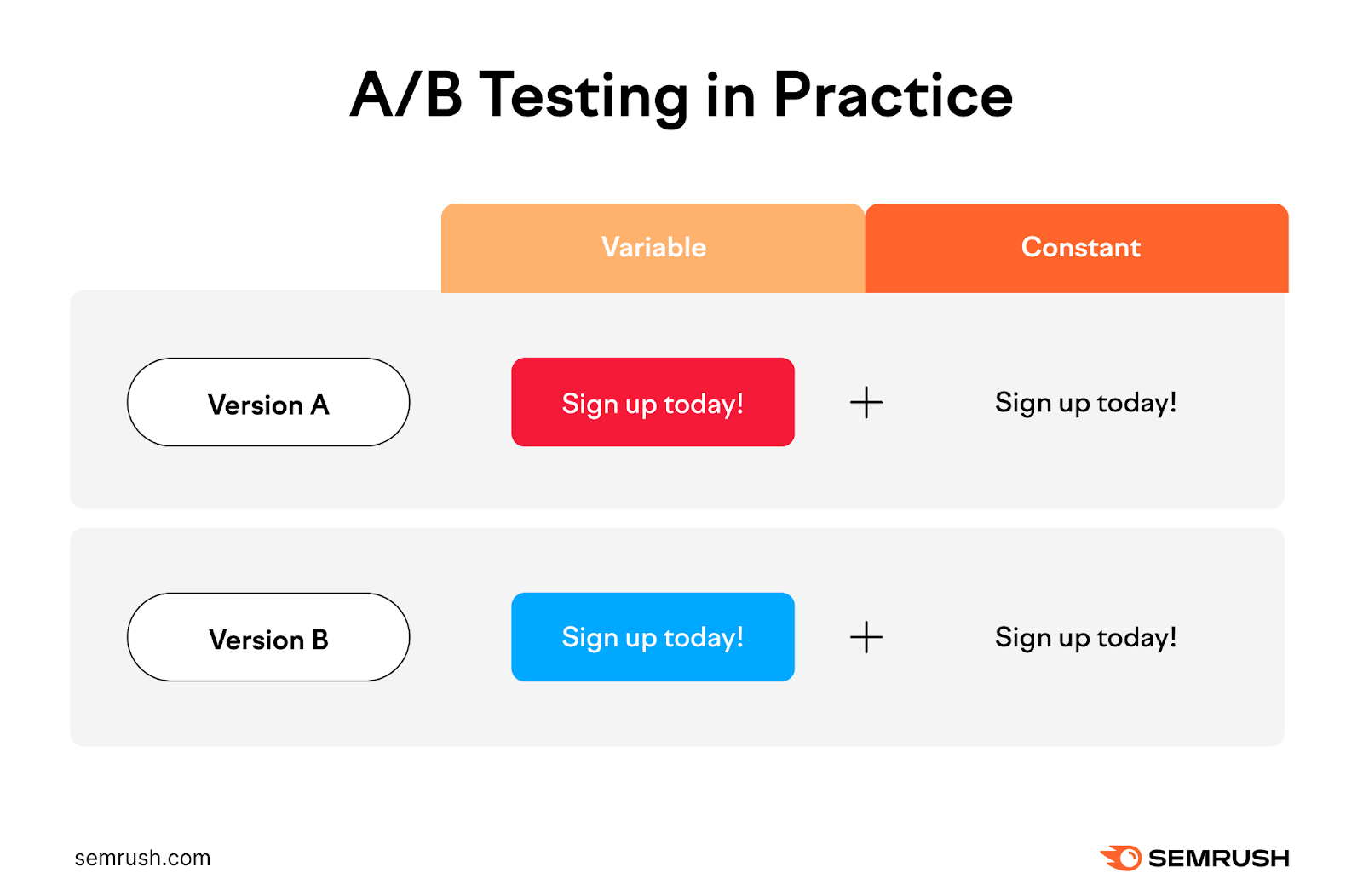

A test starts with two versions of a single element, for example, a red CTA button and a blue CTA button. You show each version to a randomly selected group within your target audience and measure its impact.

You then compare the control version (A) against a variant (B) to determine which delivers the better outcome, such as a higher click-through rate (CTR).

In the above example, 50% of users will see a red CTA button, and 50% will see a blue button. The version that gets the most clicks “wins.”
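If you are curious how a 50/50 split can be implemented, here is a minimal Python sketch. It assumes each visitor has a stable ID. The function name `assign_variant` and the experiment label `cta-color` are made up for illustration and do not come from any particular testing tool.

```python
import hashlib


def assign_variant(user_id: str, experiment: str = "cta-color") -> str:
    """Place a user in group A or B, with roughly half of users in each."""
    # Hashing the experiment name together with the user ID means the same
    # user always sees the same version within one experiment.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % 100  # a number from 0 to 99
    return "A" if bucket < 50 else "B"


# Illustrative check with made-up user IDs: count how many land in each group.
groups = [assign_variant(f"user-{i}") for i in range(10_000)]
print("A:", groups.count("A"), "B:", groups.count("B"))
```

Hashing is used here instead of a coin flip on every visit so that returning visitors keep seeing the same button color. Most A/B testing tools handle this step for you.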

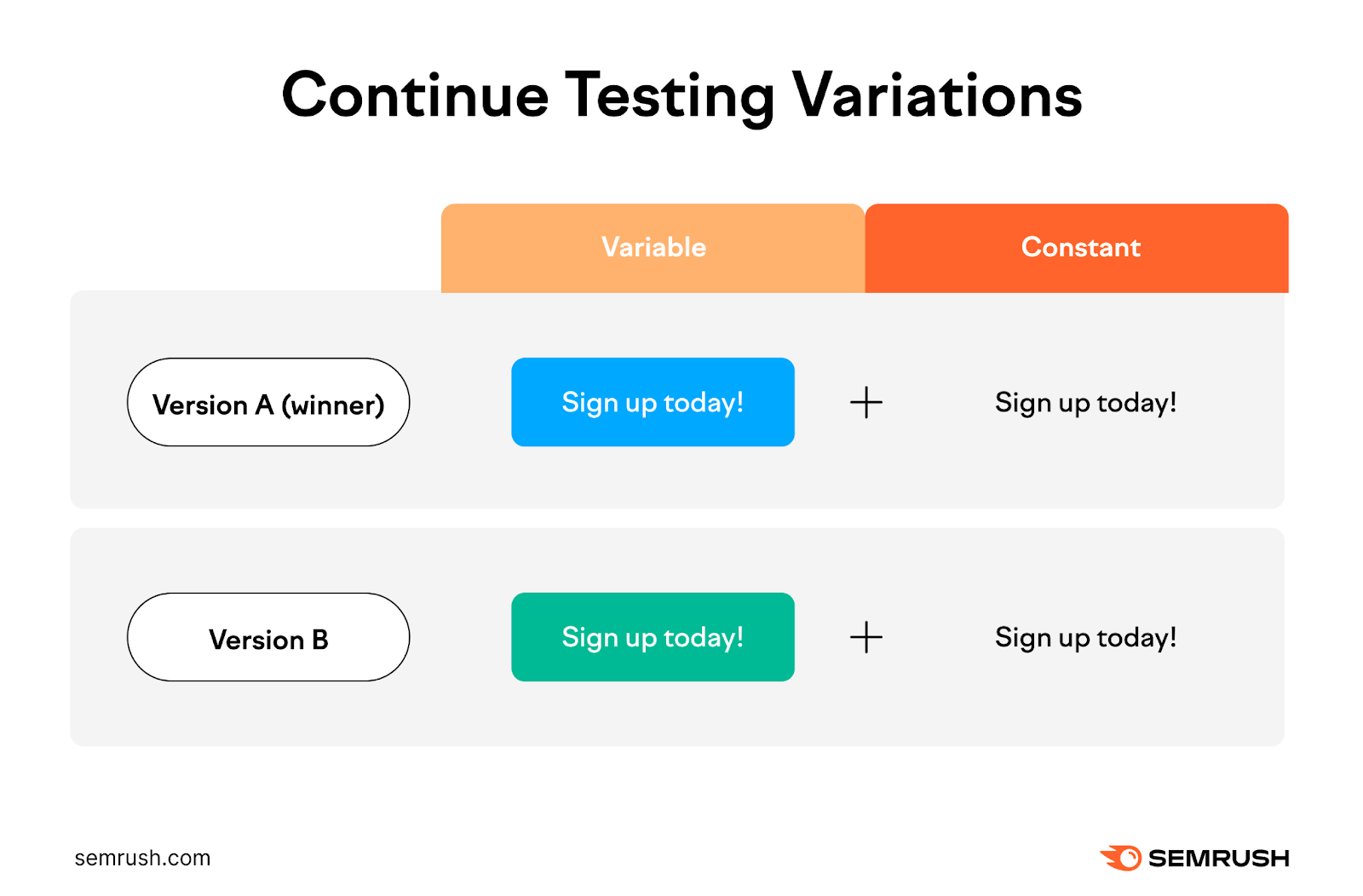

Compare the winning version against a new variant, such as a green CTA button. Repeat until you achieve the best possible results.

Core Components of A/B Testing

Let's take a quick look at the components of an A/B test:

- Goal: Define the metric you want to improve or the problem you're trying to solve. For example, you may want to boost website traffic or reduce your bounce rate.

- Hypothesis: Consider one or two changes that could bring you closer to your goal. This could be something like, "Adding a product video to a landing page will increase sales by 25%–30%."

- Variables: Determine what to test. These could be your page titles, lead images, email subject lines, CTA buttons, or other elements.

- Duration: Decide how long you'll run the test. Make sure you have enough time to gather meaningful data.

- Metrics: Choose appropriate metrics to assess the outcome of your A/B test. These should be closely related to your goal(s). For example, if you want to increase sales, measure the average order volume, conversion rates, sales cycle length, and other metrics.

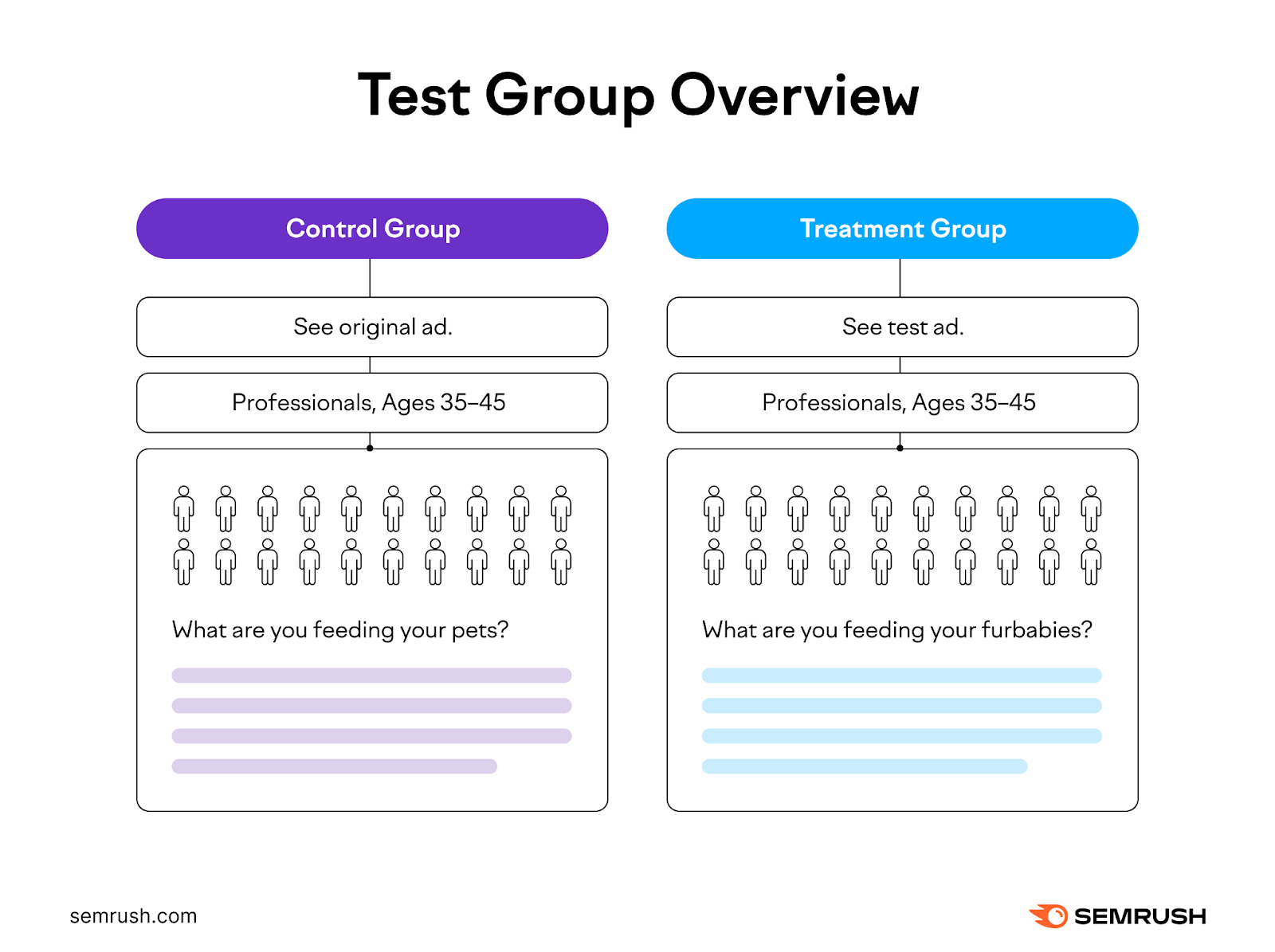

- Control group: Select a random sample of consumers who will only see the original version (A) of your social media post, email copy, or whatever elements you're testing.

- Treatment group: Choose a random group with similar characteristics to those of the control group. These users will see the modified version (B) of your social media post or other digital assets.
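One way to keep these components together is to write them down as a simple test plan before you start. The Python sketch below is only an illustration. The class `ABTestPlan`, its field names, and the sample values are assumptions, not part of any Semrush tool.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ABTestPlan:
    """Holds the components of an A/B test (all names are illustrative)."""

    goal: str
    hypothesis: str
    variable: str
    duration_days: int
    primary_metric: str
    secondary_metrics: List[str] = field(default_factory=list)

    def problems(self) -> List[str]:
        """Return the reasons the plan is not ready to run."""
        issues = []
        for name in ("goal", "hypothesis", "variable", "primary_metric"):
            if not getattr(self, name).strip():
                issues.append(f"{name} is empty")
        if self.duration_days <= 0:
            issues.append("duration_days must be positive")
        return issues


plan = ABTestPlan(
    goal="Increase landing page sales",
    hypothesis="Adding a product video to a landing page will increase sales",
    variable="Product video (absent in A, present in B)",
    duration_days=30,
    primary_metric="conversion rate",
    secondary_metrics=["average order volume"],
)
print(plan.problems())  # an empty list means every component is filled in
```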

Why Should You Run A/B Tests?

A/B testing allows you to make data-driven decisions about your site's layout, headlines, social media posts, and other elements. It can also help you meet customers' needs.

For example, you may realize that your audience prefers a particular color scheme or tone of voice. These insights can lead to:

- Lower bounce rates: Test your copy and other elements on your site to determine which ones boost visitor retention and engagement

- Increased conversions: Experiment with different headlines, CTA buttons, product photos, or other variables that impact conversion rates

- Improved customer experience: Tweak your website layout, copy, emails, and social media posts to deliver seamless experiences across all channels

- Time and cost savings: See which of your ads, email campaigns, and social media strategies drive the best return on investment

- Data-driven decision-making: Plan, manage, and optimize your marketing efforts based on hard facts

For instance, use A/B testing to determine the impact of your subject lines on email open rates. Or, test two versions of an online ad. Then, allocate your budget to the version that generates the highest ROI.

How to Run a Basic A/B Test in 7 Steps

A/B testing varies depending on your goals and tools.

For example, running an SEO split test differs from A/B copy testing.

Rather than trying to cover everything, we created a step-by-step guide to help you run a basic A/B test on two pieces of content.

1. Look for Improvement Opportunities

First, look at the data collected from your site, mobile app, ad campaigns, or other digital assets to identify areas for improvement.

These insights may come from:

- Semrush’s On Page SEO Checker

- Semrush’s Organic Traffic Insights

- Google Analytics (GA4)

- Google Search Console (GSC)

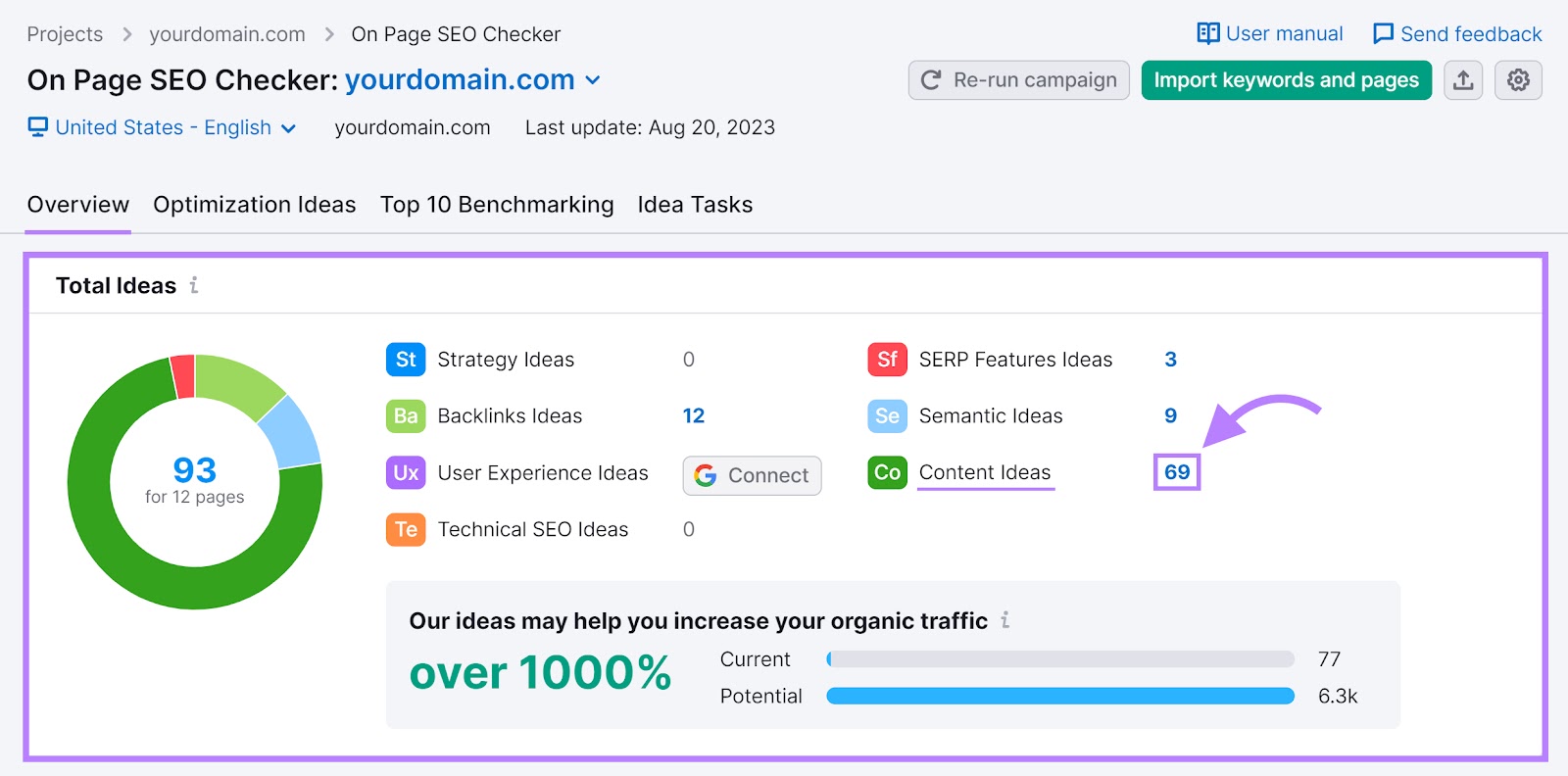

For example, use Semrush's On Page SEO Checker to see which pages to optimize for higher traffic and engagement. The tool will also generate a list of optimization ideas to help you get started.

Here’s how:

Access the On Page SEO Checker from the Semrush dashboard. Next, enter your domain name and click "Get ideas."

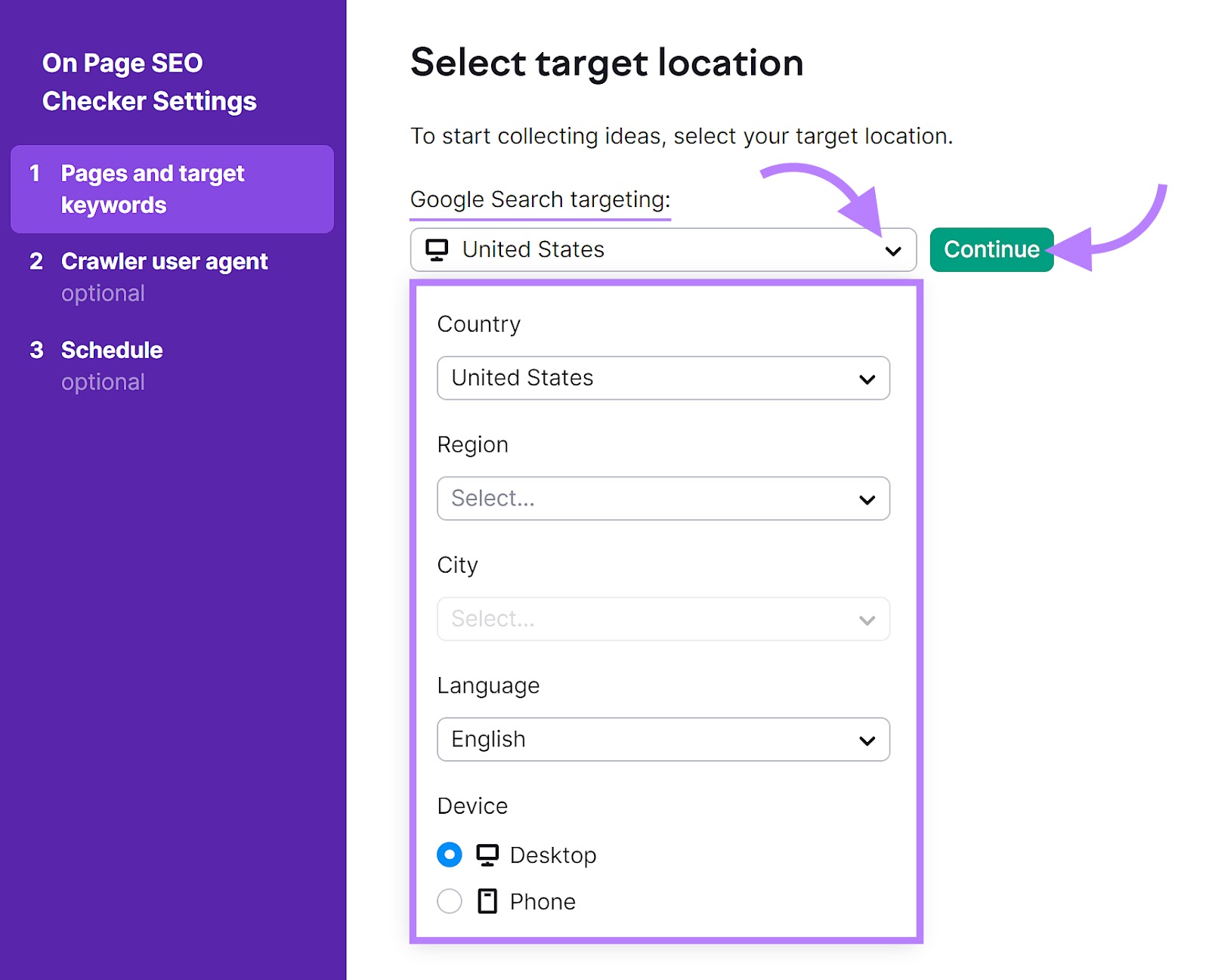

Select your target location, preferred language, and device type from the drop-down menu under "Google Search targeting." When you're done, click "Continue."

Semrush will open a pop-up window displaying your web pages. From here, add or remove pages. Or, connect your GSC account for further insights.

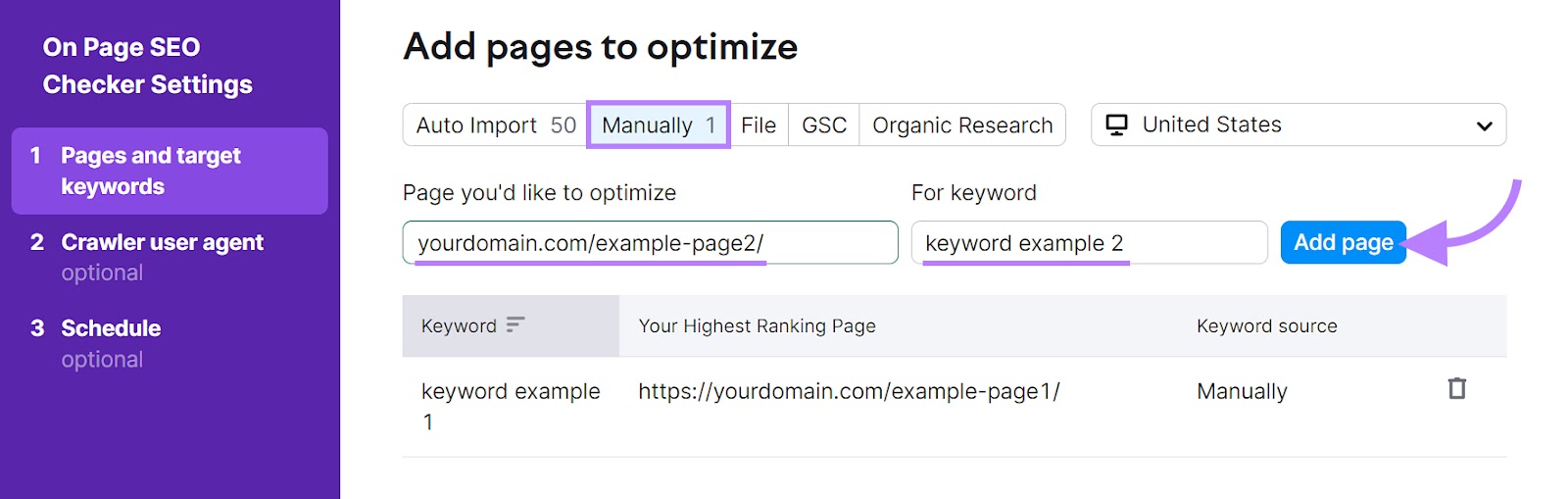

To add a new page, click “Manually.” Enter the URL and the keyword you want to optimize it for, then click “Add page.” Repeat this step for each page you want to add.

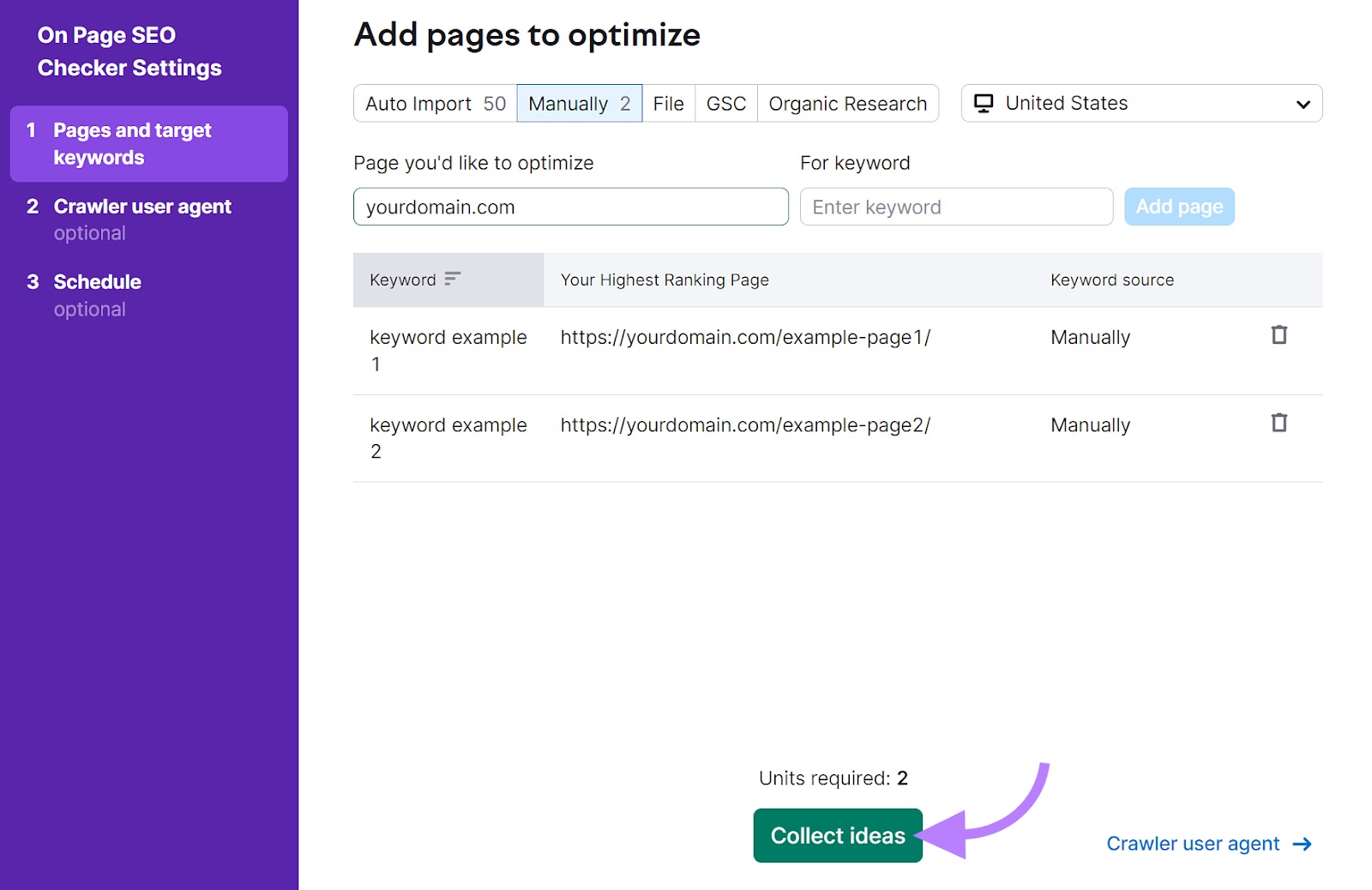

Once your pages are added, click "Collect ideas."

The On Page SEO Checker will scan your pages and make recommendations for improvement.

For instance, it may suggest adding more content, building links, or fixing technical issues that hurt your SEO performance.

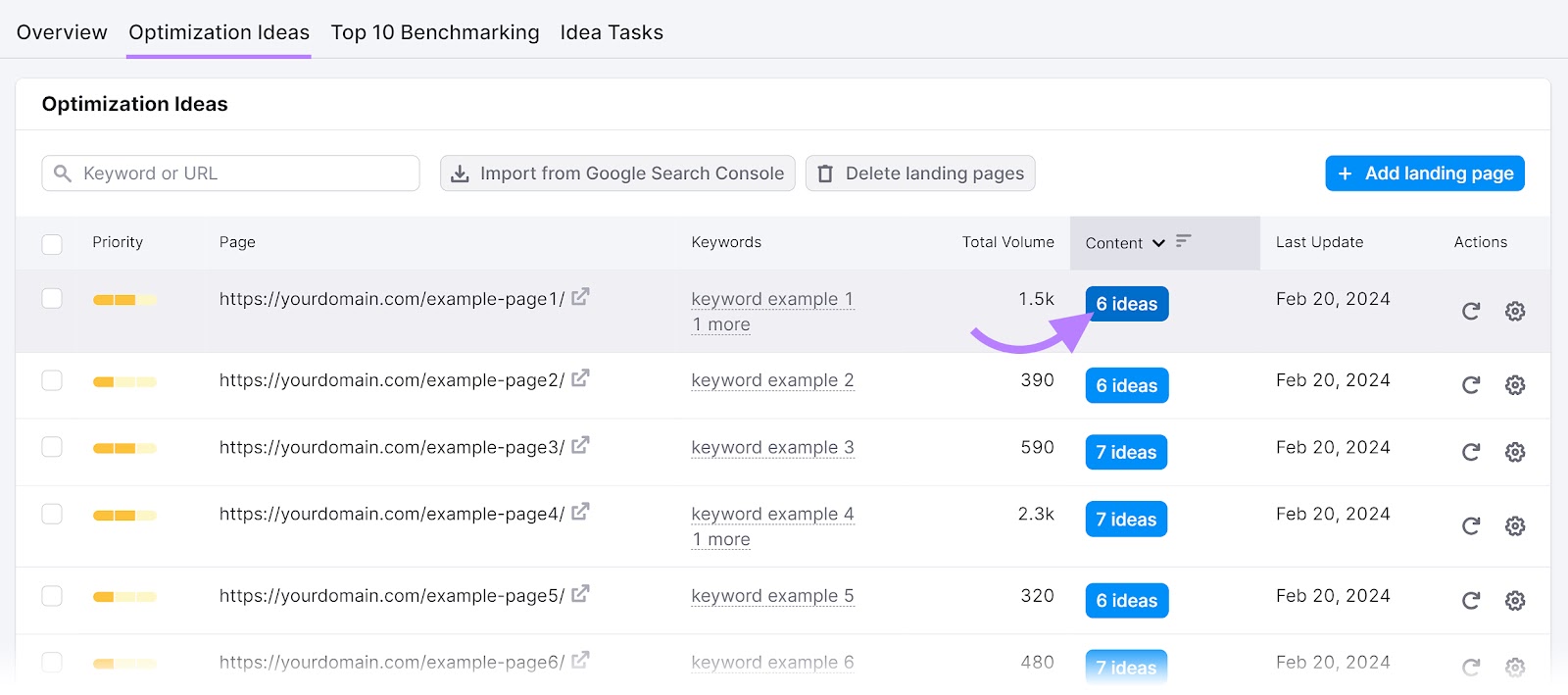

To see these recommendations, click the “# ideas” button next to the page you want to optimize.

However, for A/B testing purposes, we'll focus on website copy. Go to "Content Ideas" in the “Total Ideas” section and click the blue number next to it.

The tool will display the pages that require your attention. Click the “# ideas” button next to each page URL to see our recommendations.

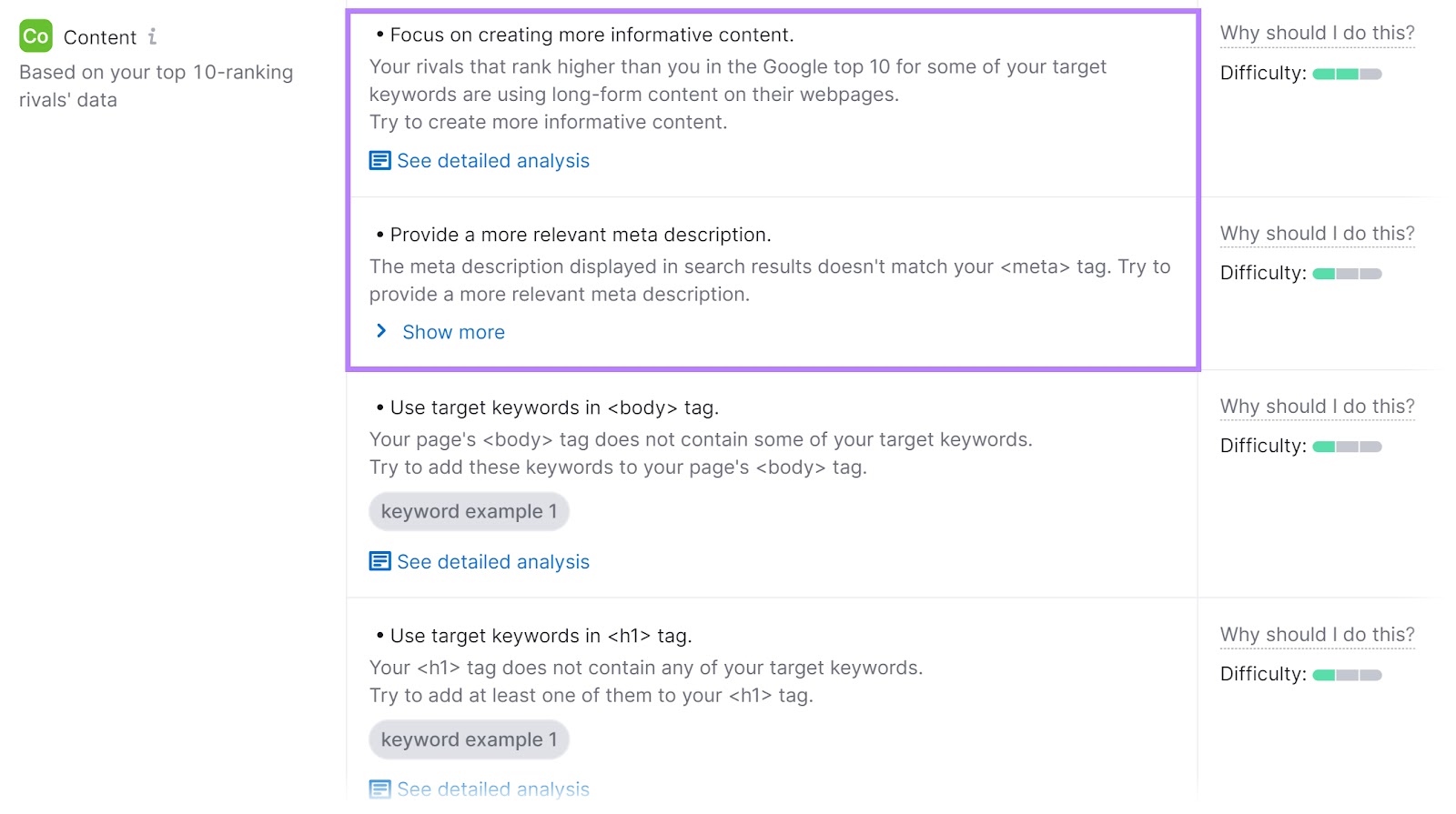

In the example below, the On Page SEO Checker suggests creating a more relevant meta description and more informative content, among other content optimization ideas.

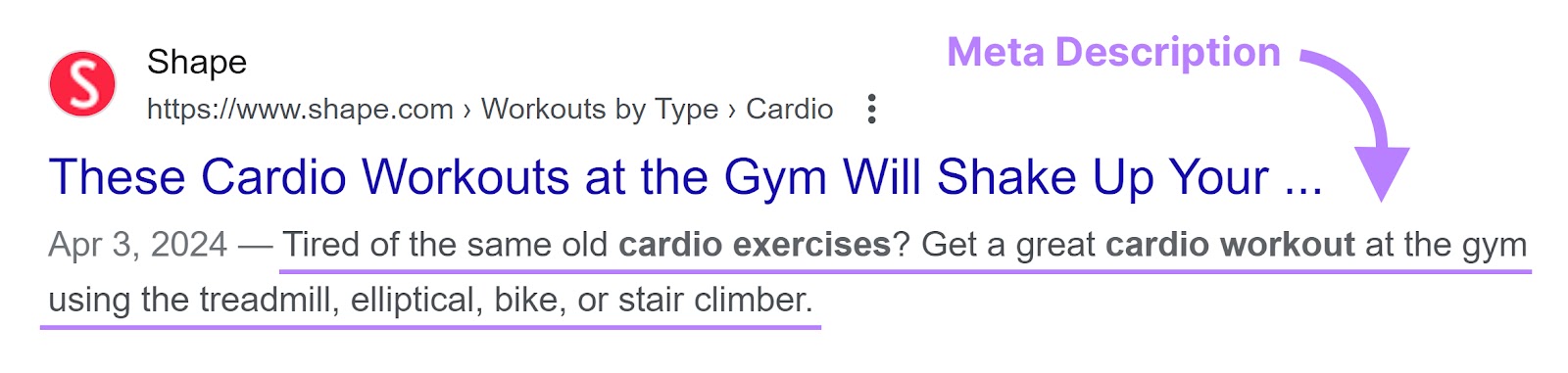

Start by creating a new version of the page’s meta description. This summary of the page will appear in search results. It should be compelling enough to convince searchers to click on your link.

Next, perform an A/B test to see how this new version compares to the previous one. For example, you could check which version has the higher click-through rate over a given period of time.

2. Identify a Variable

Now that you know what to improve, choose one variable to test. This could be a headline, introduction, lead image, or another element.

Let’s assume your landing page has a low conversion rate despite getting steady traffic. A good starting point is to A/B test the CTA button since it can significantly impact conversions.

Or, you could use a heatmap tool like Hotjar or Crazy Egg to track visitor behavior in real time. This should give you an idea of which elements you should focus on.

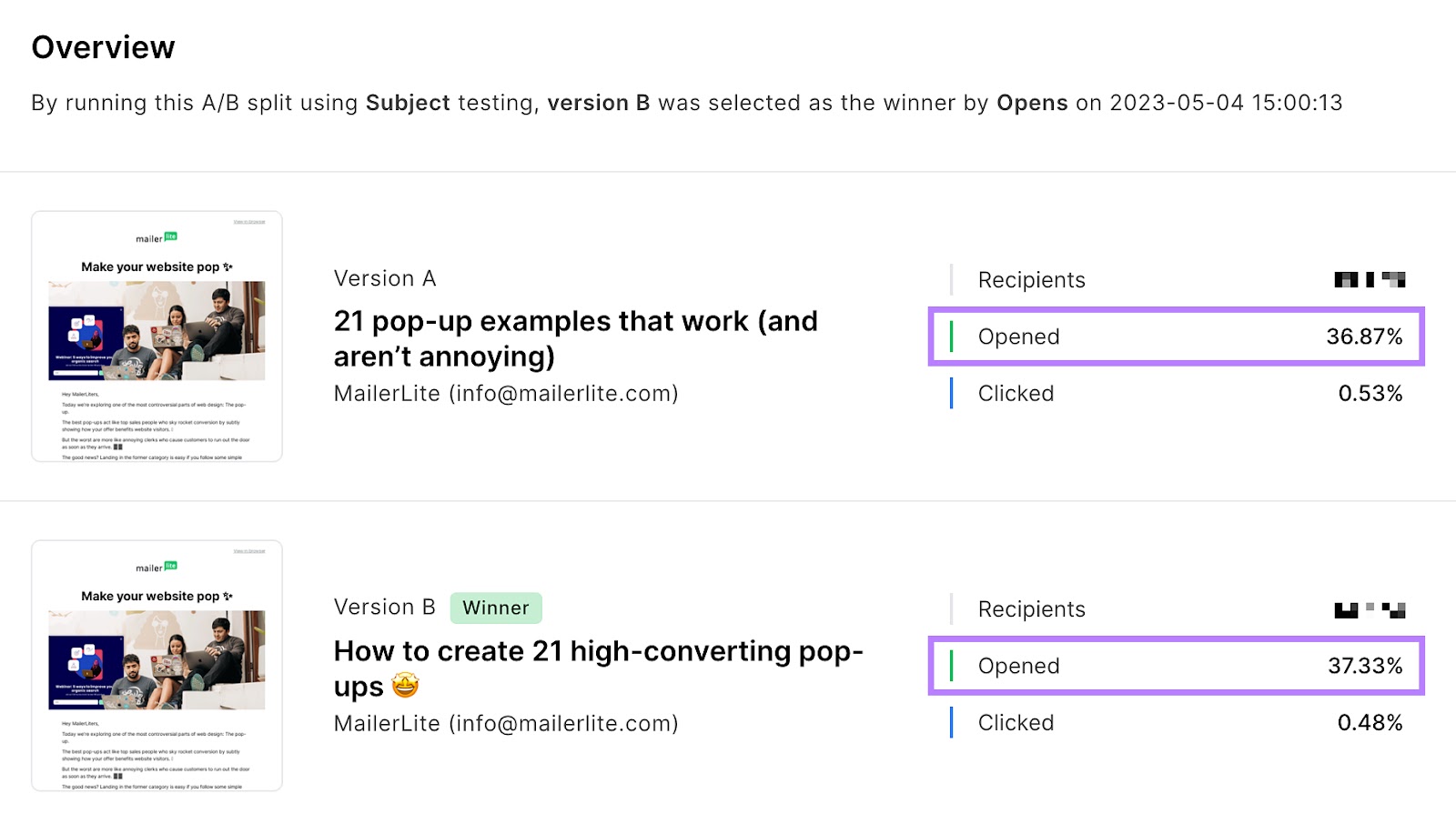

For instance, MailerLite tested two versions of an email subject line to judge its impact on open rate:

Image Source: MailerLite

Version B had a 37.33% open rate, whereas the original version had an open rate of 36.87%. So, they decided to use version B.

3. Settle on a Test Hypothesis

Next, form a hypothesis: a prediction of how changing your chosen variable will fix the problem.

Also, consider how proving or disproving that hypothesis will benefit your business. That’s how you’ll know if the tests you’re about to conduct are worthwhile.

Take these steps to develop a strong hypothesis:

- Review your data to identify the problem

- Propose a solution (define the variable you want to change)

- Anticipate the potential outcome

- Determine how you’ll measure the results

For instance, let’s say your emails have a low open rate.

A hypothesis could be "Subject lines with emojis result in higher open rates." You’ll measure the results with Campaign Monitor, MailerLite, or whatever email marketing platform you use.

4. Set Your Goals, Test Period, and Sample Size

Measure several key performance indicators (KPIs) during your test. One or two are likely to matter more than others.

Choose a primary metric before you start. This will help you gauge success. Some examples include:

- Number of visitors to a web page

- Email open rates

- Click-through rates

- Newsletter sign-ups

- Subscriptions

- Bounce rate

For instance, if you’re A/B testing on-page SEO elements like page titles or meta descriptions, track the number of impressions and clicks. You could also measure the CTR.

You should also choose a test period to suit your objectives.

Longer tests generate more accurate data. But the sooner you have results, the sooner you can act on them—so balance is important.

One month is often a reasonable starting point.

If you’re testing changes to existing content, benchmark current performance (your current metric numbers) to create a baseline against which to measure the new version.

For some test elements, measure their performance based on time.

For example, check a blog post’s page views over the previous 30 days before implementing new changes. And recheck it 30 days later.

For other elements, tracking performance based on sample size is easier.

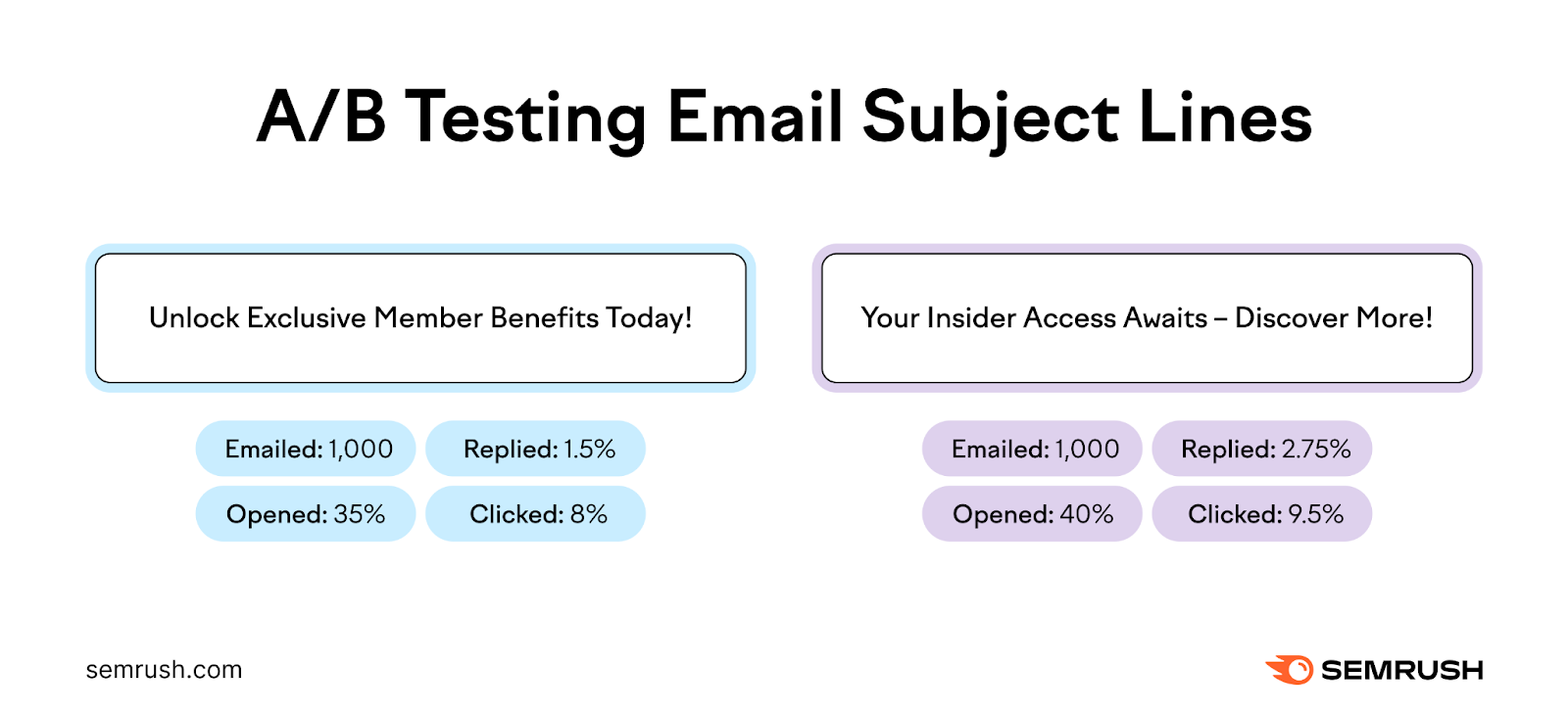

Say you’re testing email subject lines. You might compare open rates (your primary metric) for batches of 1,000 emails. That way, the impact of your changes should be clear and accurate.
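To make the comparison concrete, here is a small Python sketch that turns raw counts into open rates and a relative lift. The batch figures (200 and 230 opens out of 1,000 emails each) are invented for illustration, and the function names are assumptions.

```python
def open_rate(opens: int, sent: int) -> float:
    """Share of sent emails that were opened."""
    if sent <= 0:
        raise ValueError("sent must be a positive number")
    return opens / sent


def relative_lift(baseline_rate: float, new_rate: float) -> float:
    """Change of the new rate relative to the baseline (0.10 means +10%)."""
    if baseline_rate <= 0:
        raise ValueError("baseline_rate must be greater than zero")
    return (new_rate - baseline_rate) / baseline_rate


# Hypothetical batches of 1,000 emails each.
control = open_rate(opens=200, sent=1_000)
challenger = open_rate(opens=230, sent=1_000)

print(f"Control open rate:    {control:.1%}")
print(f"Challenger open rate: {challenger:.1%}")
print(f"Relative lift:        {relative_lift(control, challenger):.1%}")
```

The same two functions work for a time-based comparison, such as page views in the 30 days before and after a change, as long as both periods are measured the same way.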

Further reading: 14 Content Marketing Metrics to Track for Success

5. Create Variations Based on Your Hypothesis

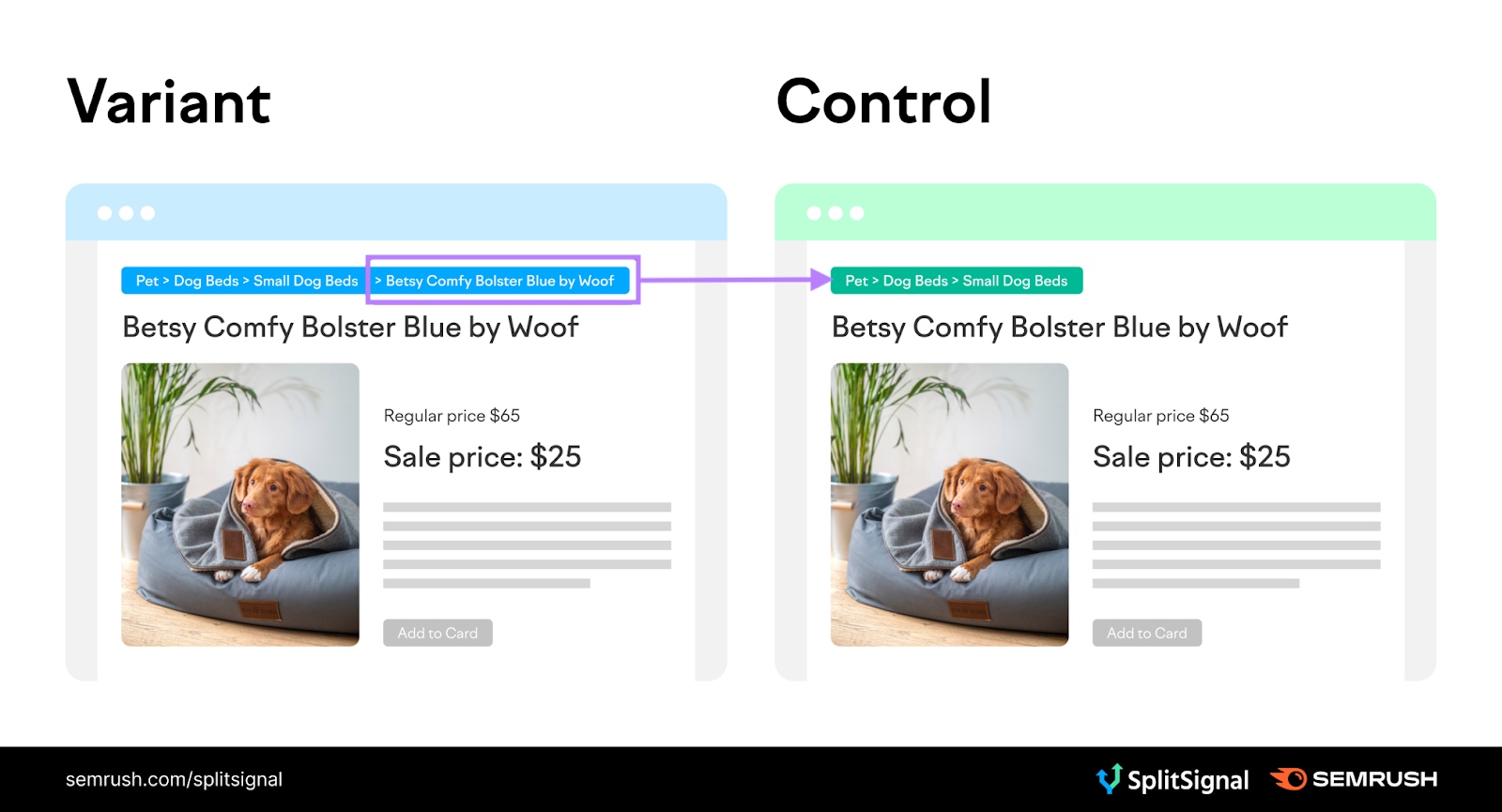

Set up your “control” and “challenger” versions.

The unaltered version is the control.

The challenger is the altered version that will compete with your control. If you test a minor variable (like heading length), copy the existing content and tweak the details as necessary.

For example, if you want to know whether adding contact information to a product page will help you generate more leads, set up (or find) a page without contact details. Then, create a new version with contact details for comparison.

Similarly, test CTA buttons in different sizes. Or, place them below the fold rather than above the fold (which is standard practice) on your site or mobile app to see if it changes anything.

6. Run Your Test

Implement a 302 redirect to your challenger page to see how your A/B test impacts SEO and content performance.

A 302 redirect tells Google that a web page was temporarily moved to a different URL. It also redirects traffic to the new page while maintaining the keyword rankings and link value of the original page.

Generally, it takes time for search engines to index pages. And it can take even longer to settle into the search engine results pages (SERPs). That’s why a temporary redirect to the challenger page is important.

At the end of your test period (e.g., one month)—or when you have enough data—compare the challenger page’s results to the control to see which one performed better.

If you decide to keep both web page versions during the test, use rel="canonical" links to tell search engines which version is the original. Otherwise, your website could get flagged for duplicate content.
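For readers who manage their own server, the Python sketch below shows what a 302 redirect and a canonical tag look like in practice. It is a toy example built on Python's standard library, not a production setup. The paths, the `example.com` URL, and the names `redirect_target`, `canonical_link_tag`, and `ABTestHandler` are all hypothetical. It shows both mechanisms side by side for brevity. In a real test, use whichever one your setup calls for.

```python
from html import escape
from http.server import BaseHTTPRequestHandler
from typing import Optional

# Hypothetical paths and URL, used only for illustration.
CONTROL_PATH = "/landing-page"
CHALLENGER_PATH = "/landing-page-b"
CONTROL_URL = "https://www.example.com/landing-page"


def redirect_target(path: str) -> Optional[str]:
    """Return the challenger path when the control page is requested."""
    return CHALLENGER_PATH if path == CONTROL_PATH else None


def canonical_link_tag(original_url: str) -> str:
    """Build the tag that tells search engines which URL is the original."""
    return f'<link rel="canonical" href="{escape(original_url, quote=True)}">'


class ABTestHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        target = redirect_target(self.path)
        if target is not None:
            # 302 marks the move as temporary.
            self.send_response(302)
            self.send_header("Location", target)
            self.send_header("Content-Length", "0")
            self.end_headers()
        elif self.path == CHALLENGER_PATH:
            # The challenger page names the control URL as the canonical one.
            head = canonical_link_tag(CONTROL_URL)
            page = f"<html><head>{head}</head><body>Challenger</body></html>"
            body = page.encode("utf-8")
            self.send_response(200)
            self.send_header("Content-Type", "text/html; charset=utf-8")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)
        else:
            self.send_error(404)
```

To try it locally, pass `ABTestHandler` to `http.server.HTTPServer` and call `serve_forever()`. Most sites would configure the redirect in their web server or CMS instead of in application code.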

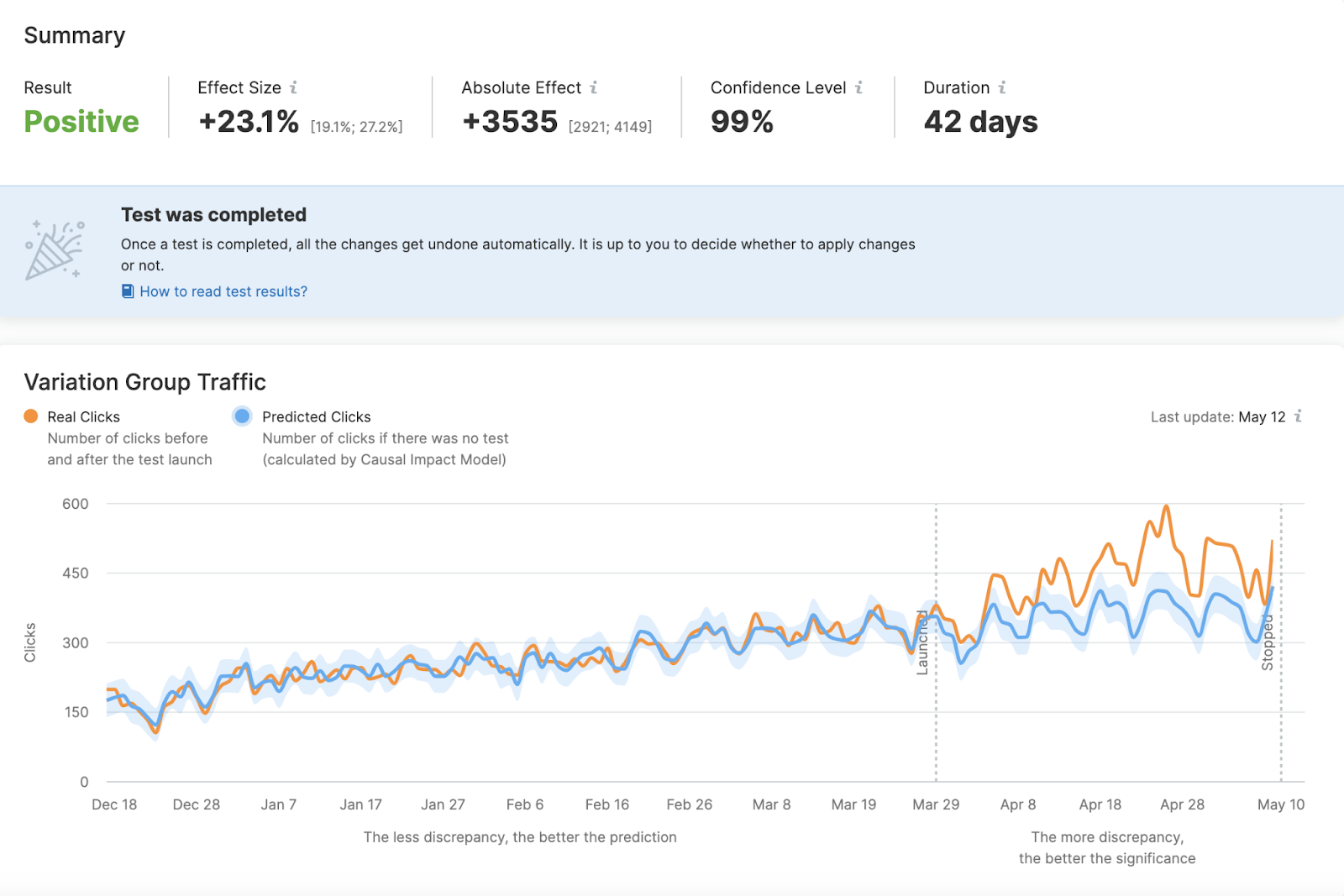

To simplify things, use Semrush's SplitSignal to run SEO A/B tests (even if you’re not tech-savvy).

Our user-friendly tool will show you the exact changes that impact website traffic so you can fine-tune your copy, meta tags, page titles, and other elements.

It offers a streamlined dashboard for easy setup and management of A/B tests. The tool also provides data-driven insights with detailed analytics and real-time results, helping you quickly implement, track, and optimize your strategies.

7. Analyze the Results and Plot Your Next Steps

Carefully analyze your metrics. Consider how they influence not just immediate goals but also broader objectives, like revenue growth and customer engagement. Then, choose the winning version based on a comprehensive analysis of this data.

What if there’s no difference between variations?

Don't be discouraged—this outcome still provides valuable insights. Consider revisiting your hypotheses and experiment design. Create new variants with different elements or strategies. And conduct further tests to refine your approach.

What if your experiment disproves your hypothesis?

Embrace this outcome. Remember, if every hypothesis is confirmed, you might not be exploring new avenues or challenging your assumptions adequately. Each disproven hypothesis is a chance to gain actionable insights about your audience's preferences and behaviors.

Use these insights to continually improve your strategies. Document your findings, analyze patterns, and apply these lessons to future tests. This ensures your approach remains data-driven and focused on achieving your long-term objectives.

Examples of What Elements to A/B Test

With A/B testing, marketing professionals can look at dozens of variables that impact their campaigns' success. These include but are not limited to:

- Headlines and subheadlines

- Website copy

- Email copy

- Email subject lines

- Product page layouts

- Button styles

- Subscription forms

- Social proof

Here are some of the most impactful tests to run.

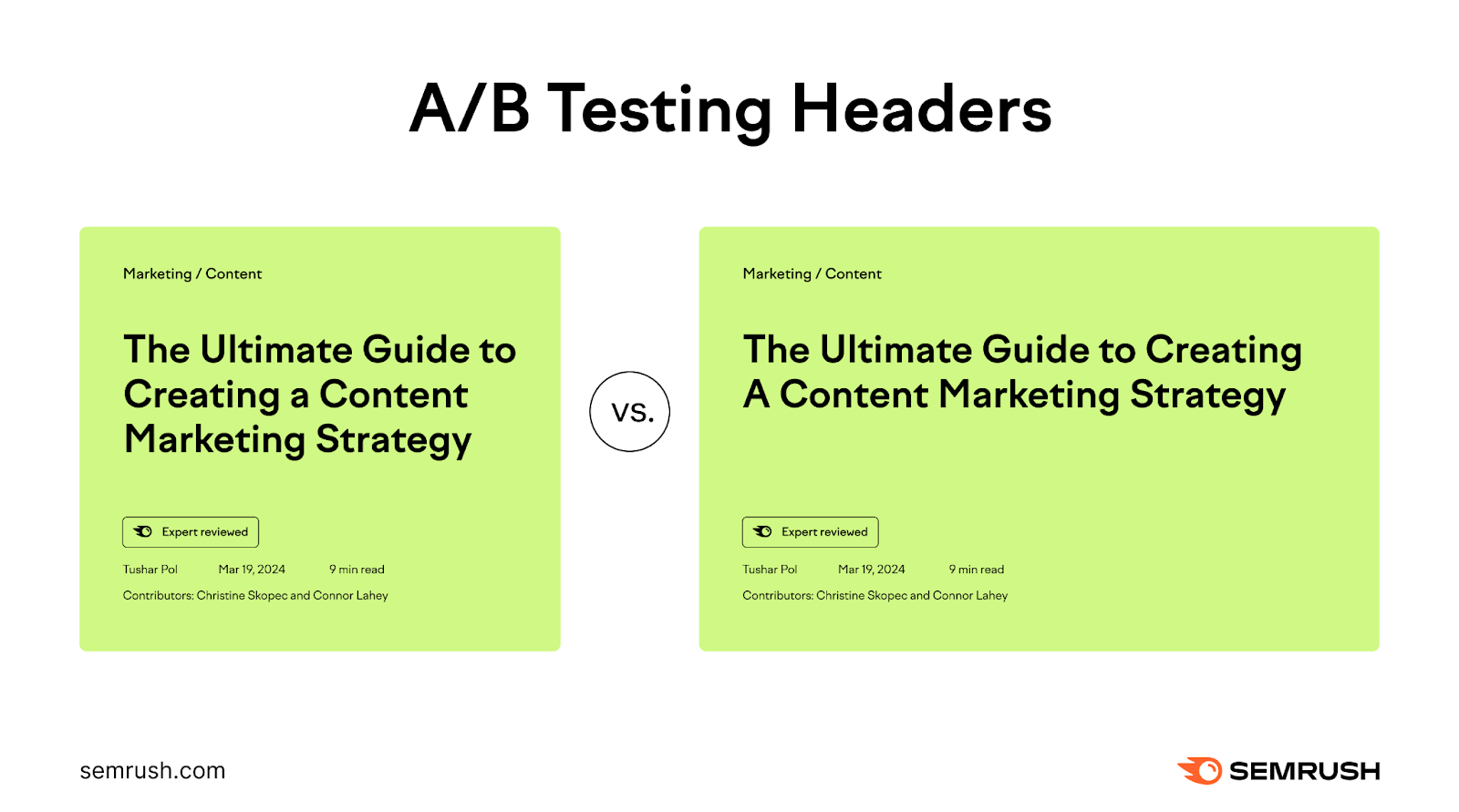

Headlines

The headline is the first thing visitors see on your webpage. That alone makes it a worthy A/B testing subject.

Use A/B testing to optimize your headlines for social media, articles, and other content types.

For instance, implement the following changes to see how they impact click-throughs, website traffic, or engagement levels:

- Shorter vs. longer headlines

- Headlines with/without numbers, emojis, or special symbols

- Headlines focused on benefits vs. features

- Headline position (e.g., left vs. right)

You can also use different font sizes and styles for your headlines or vary your tone of voice. Sometimes, minor tweaks—like changing a single word—can have a significant impact.

Further reading: How to Write SEO Headlines That Rank (+ 9 Powerful Templates)

Call to Action

A great CTA can be the difference between someone converting (e.g., purchasing your product or subscribing to your email newsletter) and visiting your competitors.

A/B testing allows you to experiment with various CTA elements, including:

- Button size

- Placement

- Copy

- Design

- Font

- Color

For example, a bigger CTA button could garner more clicks because it's more visible.

Similarly, the color red can create a sense of urgency and grab attention. Test it for your CTA buttons to see if it improves click rates.

Further reading: 30 Attention-Grabbing Call-to-Action Examples

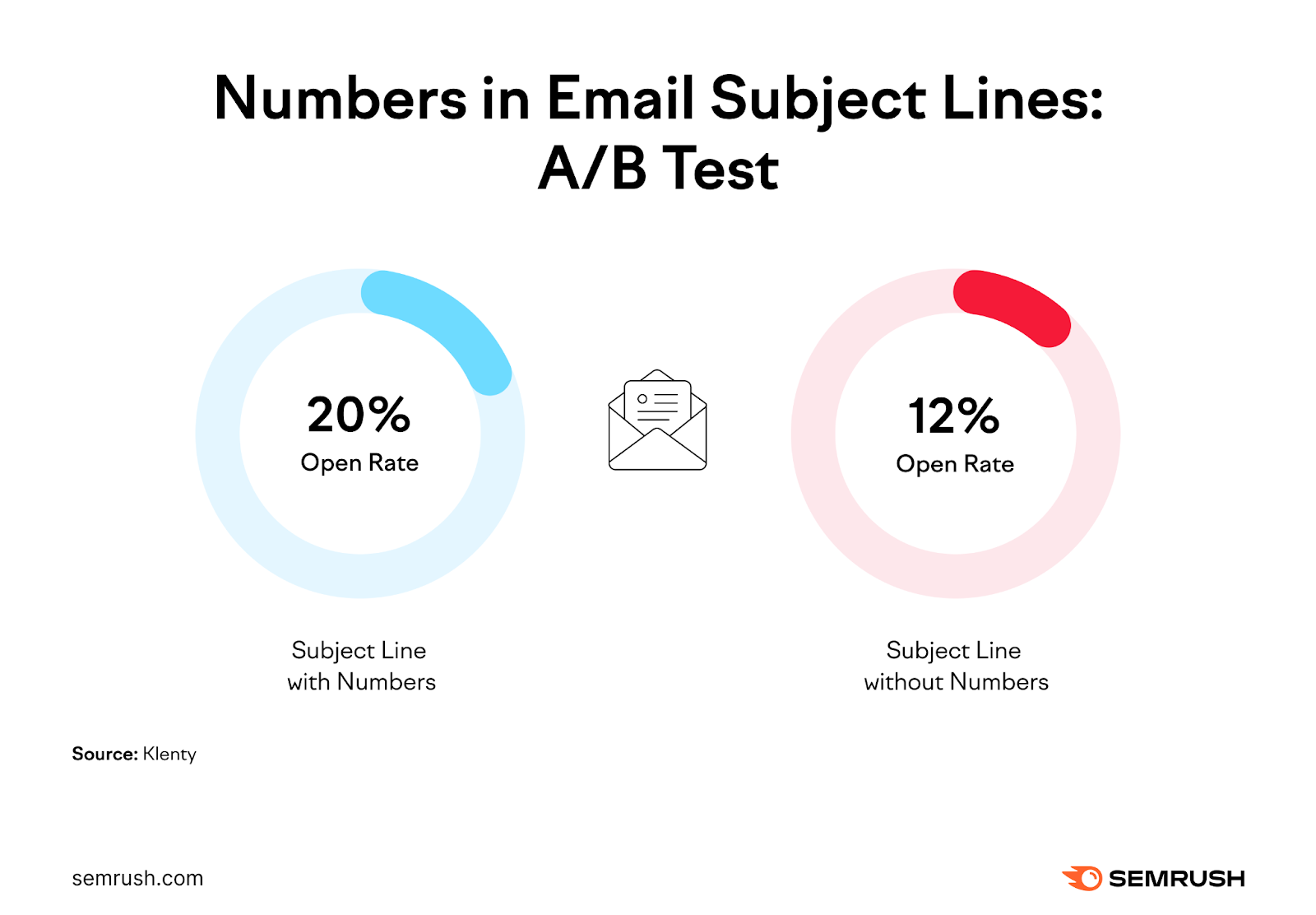

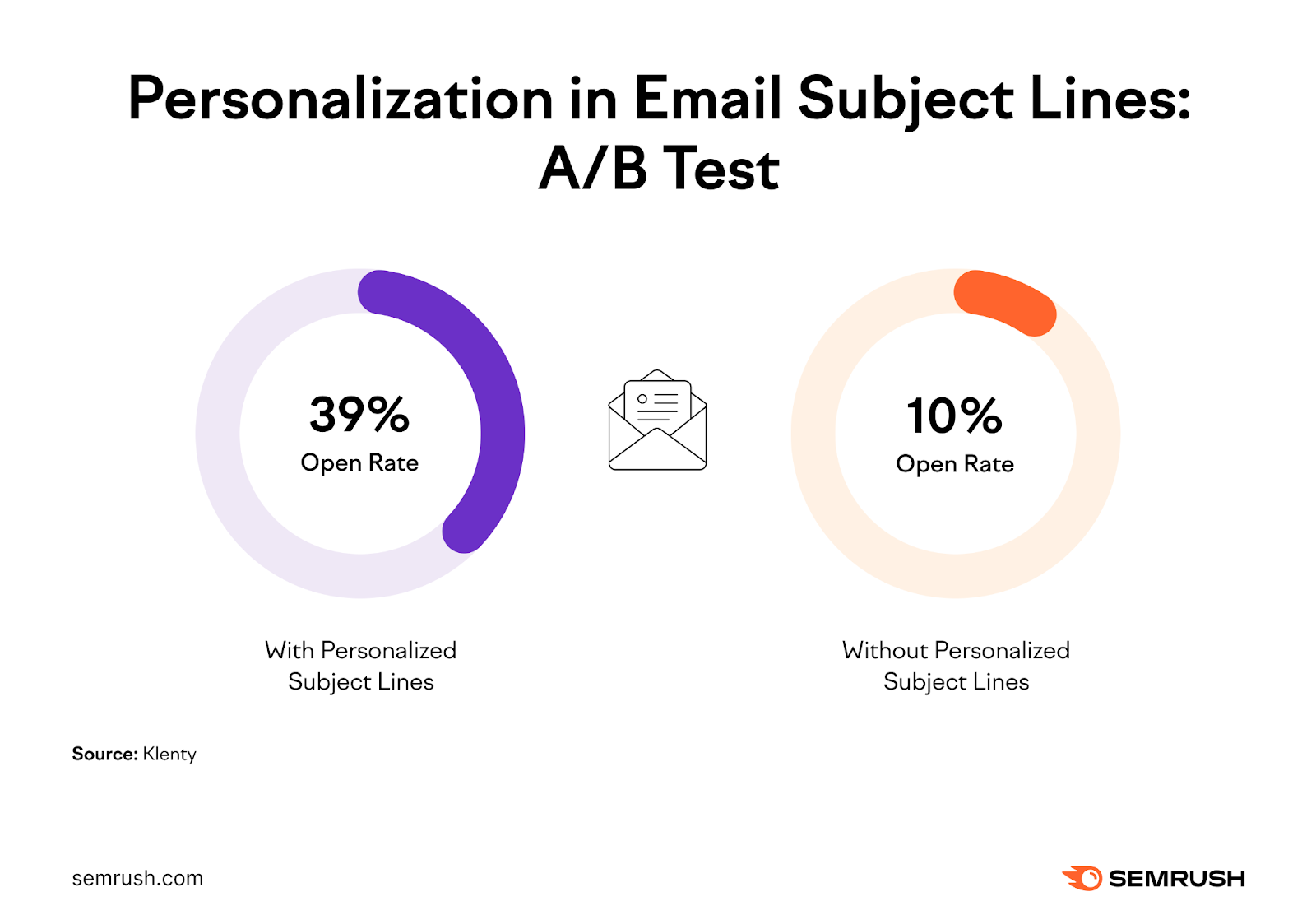

Email Subject Lines

Including numbers in subject lines can boost email open rates, according to a Klenty study.

Emails with numbers in the subject line had an average open rate of 20%. By comparison, those without numbers had a 12% open rate.

Klenty also found that including the recipient’s name in the subject line can result in a 39% open rate. Emails with generic subject lines had an open rate of only 10%.

With A/B testing, you can compare different subject line versions to determine the highest performer. Here are some testing ideas:

- Add numbers (where relevant)

- Include the recipient's name

- Ask questions

- Create a sense of urgency

- Eliminate words that trigger spam filters (e.g., Earn extra, Free offer, Cash bonus)

- Include a forward ("Fwd:") or reply ("Re:")

Layout and Navigation

The best way to smooth conversion pathways for your users? Put the most appealing and helpful content right in front of them.

Two pages could have the same content. However, if one looks cluttered or emphasizes the least valuable information, it will engage fewer visitors.

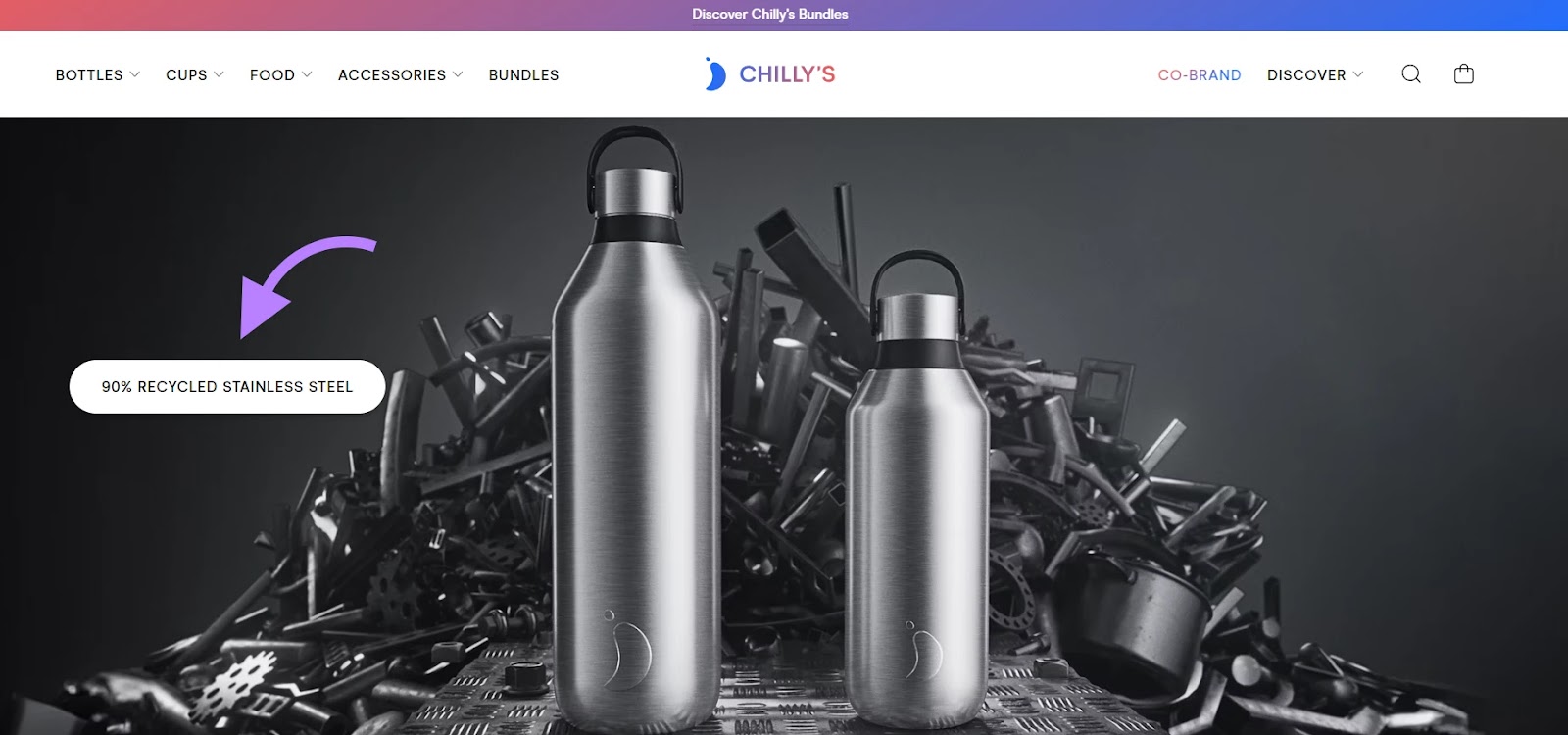

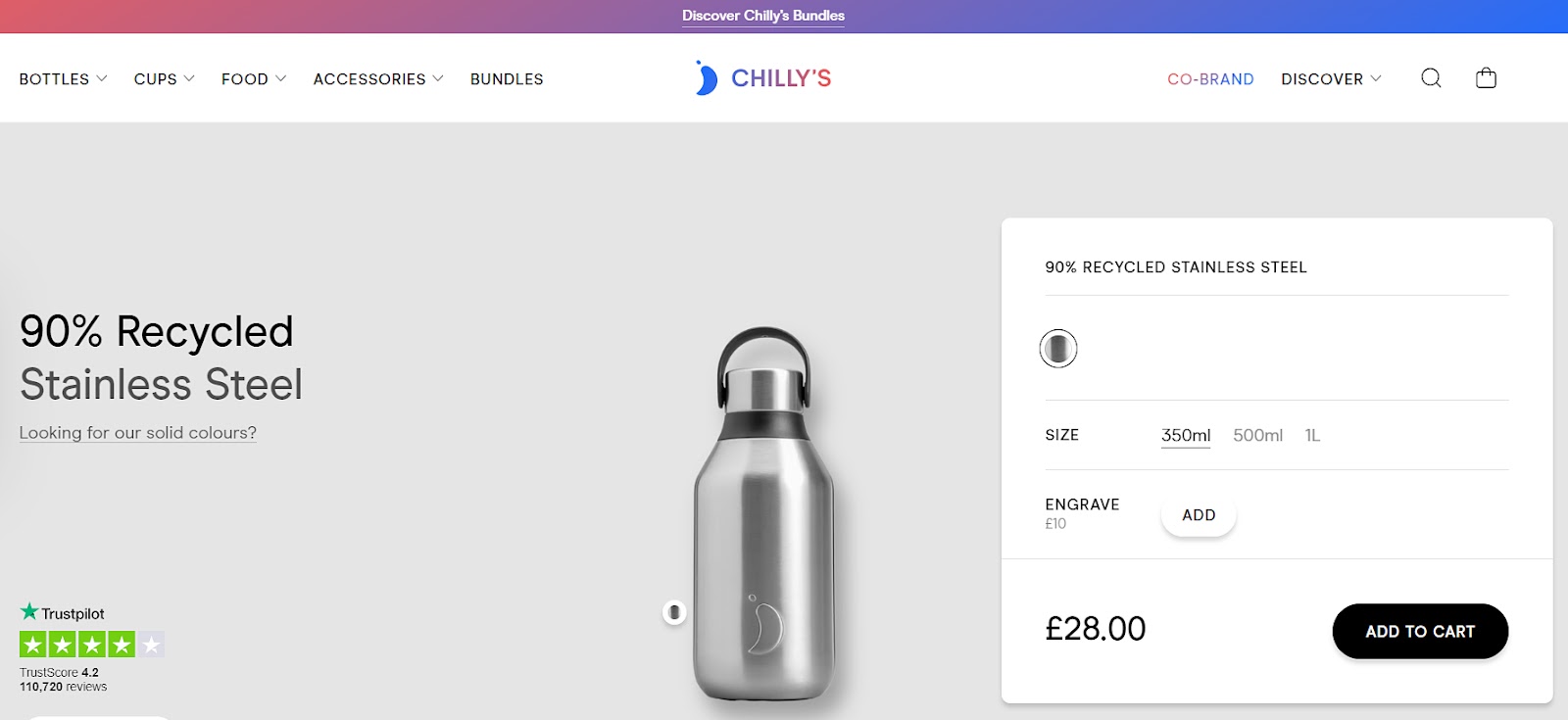

For example, Chilly’s homepage opens with a photo gallery that grabs attention instantly.

The first photo showcases two reusable bottles with a label telling visitors the bottles are made from 90% recycled stainless steel.

When someone clicks the image, Chilly’s takes them directly to the product page. So, instead of browsing the site to find that particular bottle type, customers can access it with just one click.

If Chilly’s placed this homepage slider further down or didn't use it, visitors might have left before seeing the link or navigated elsewhere on the website.

Use A/B tests to experiment with different site elements. These may include the navigation menu, buttons, forms, images, and more.

Further reading: A Beginner’s Guide to A/B Testing Landing Pages

Social Proof

Consumers make decisions based on other people's actions and recommendations. This is social proof.

For example, consulting firm Gartner found that 86% of businesses consider verified reviews "important" or "very important" when shopping for software products.

In another survey, 61% of consumers said they were likely to trust recommendations from friends, relatives, or social media influencers. And 38% admitted they trust recommendations from a brand on social networks.

A/B test the customer reviews, testimonials, case studies, and other social proof used in your marketing strategy.

For example, compare two versions of a sales email, one with customer reviews and another featuring a case study. Determine which variant produces higher CTRs, conversions, or your preferred metrics.

Best Practices for Effective A/B Testing

Now that you know how to design an A/B test, follow these practices to gain actionable insights.

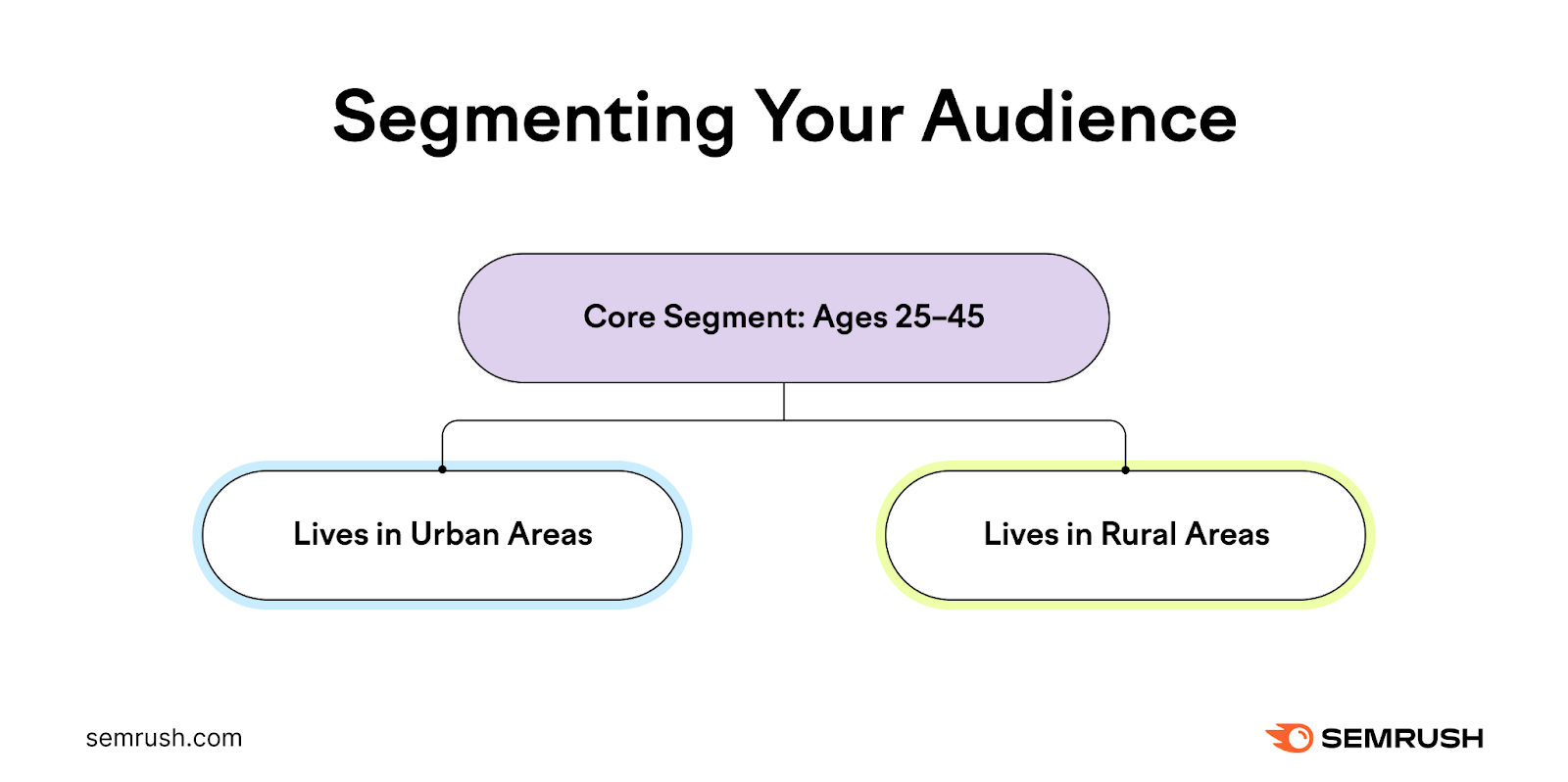

Segment Your Audience Appropriately

Create relevant audience segments based on what you want to test. Start with a broad group, such as men or women, and break it down further into smaller segments, like:

- Mobile users vs. desktop users

- New vs. returning visitors

- College students vs. young professionals

You can also segment your audience by age, location, traffic source, or other characteristics. Run several A/B tests to gather data for different groups. Then, tailor your marketing efforts accordingly.

Select an adequate sample size for statistically significant results. Consider the control group's current conversion rate (baseline conversion rate) and the minimum detectable effect. The latter is the smallest improvement from version B that you want the test to detect reliably.

Next, use an A/B sample size calculator to determine the number of participants needed for an effective test.

For example, let’s say you’re running an ecommerce website, and you want to optimize the product page to increase conversions. You've decided to A/B test the page title.

Your current conversion rate is 20% (the baseline conversion rate), and you’re looking for a 5% increase (the minimum detectable effect). Using the sample size calculator, you determine you need a sample size of 1,030 visitors (515 in each group) for an effective test.
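If you want to see roughly what a sample size calculator does behind the scenes, here is a minimal Python sketch of one common textbook formula for comparing two conversion rates. It makes several assumptions that are not stated above: the 5% increase is read as an absolute lift (20% to 25%), the test is two-sided at a 5% significance level, and the statistical power is 80%. Calculators differ in these defaults, so the output will not necessarily match the figure quoted above.

```python
import math
from statistics import NormalDist


def sample_size_per_group(
    baseline_rate: float,
    absolute_lift: float,
    alpha: float = 0.05,  # assumed significance level (two-sided)
    power: float = 0.80,  # assumed statistical power
) -> int:
    """Approximate visitors needed in EACH group to detect the given lift."""
    p1 = baseline_rate
    p2 = baseline_rate + absolute_lift
    if not (0 < p1 < 1 and 0 < p2 < 1) or absolute_lift == 0:
        raise ValueError("rates must be between 0 and 1, and lift must be non-zero")

    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)

    n = (z_alpha + z_power) ** 2 * variance / (p2 - p1) ** 2
    return math.ceil(n)


per_group = sample_size_per_group(baseline_rate=0.20, absolute_lift=0.05)
print("Visitors per group:", per_group)
print("Total visitors:    ", per_group * 2)
```

Under these particular assumptions, the formula asks for roughly 1,100 visitors per group. Lowering the power, using a one-sided test, or choosing a different calculation method gives a smaller number, which is why two calculators can disagree for the same inputs. Whatever tool you use, check which settings it applies.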

This approach can give you valuable insights into how different groups engage with your content. For example, you'll discover which variations of your content, titles, or CTA buttons perform better for specific segments.

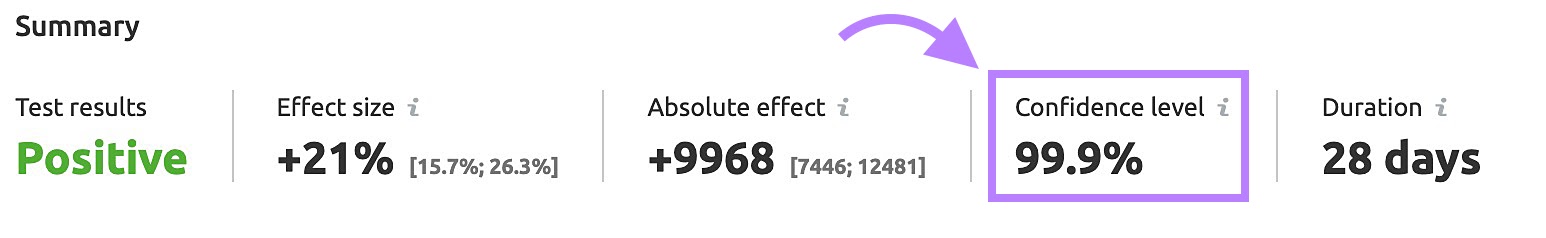

Ensure Statistical Significance

Statistical significance means that your test results are unlikely to have occurred by chance or due to sampling errors. Instead, they likely have a specific cause, such as the variation being tested or consumers' preferences for a particular feature.

A higher confidence level means a lower risk of mistaking random variation for a real effect. But even if this value reaches 95% or more, you should still conduct further tests to confirm and validate your findings.

Ideally, use a dedicated A/B testing tool to measure statistical significance.

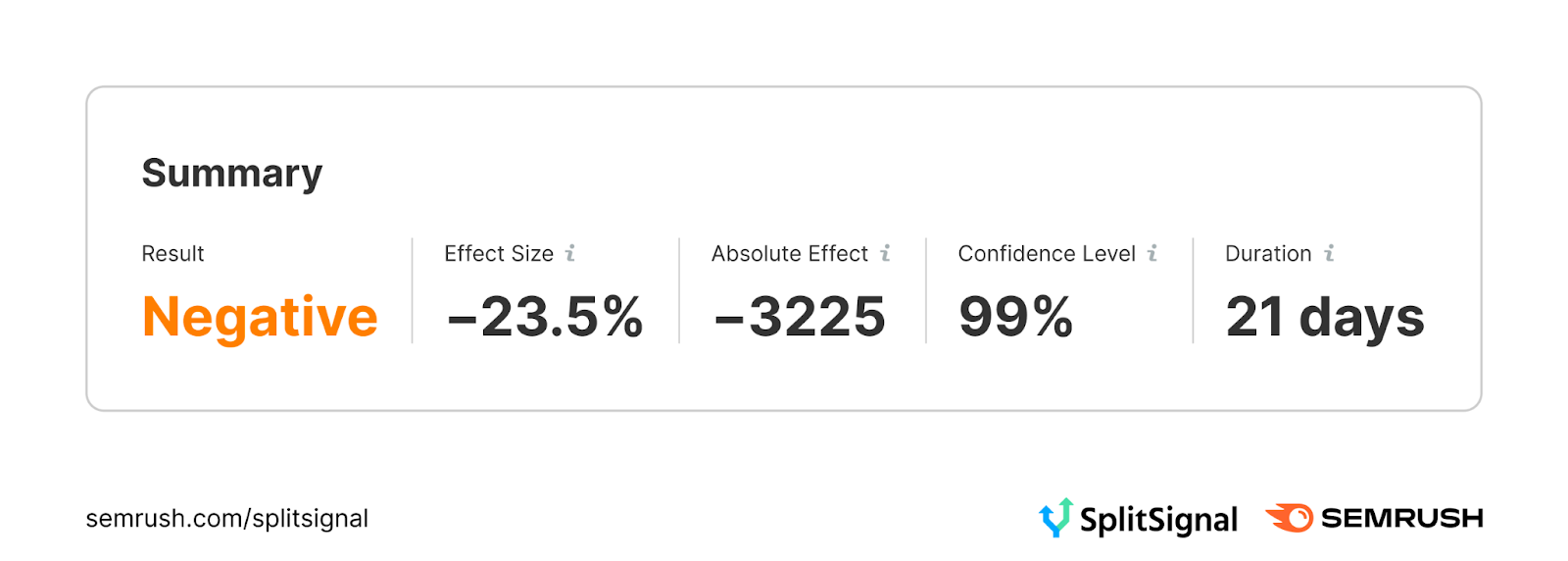

For instance, Semrush’s SplitSignal can automatically calculate statistical significance. Our A/B testing software displays this value as “Confidence level.”
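If you don't have a dedicated tool at hand, you can approximate the check yourself. The Python sketch below runs a two-proportion z-test, one standard way to compare two conversion rates. It is not a description of how SplitSignal calculates its “Confidence level.” The visitor and conversion counts are invented for illustration.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, p_value) for the difference between two conversion rates."""
    if n_a <= 0 or n_b <= 0:
        raise ValueError("group sizes must be positive")

    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_error = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_error == 0:
        raise ValueError("cannot test when no one or everyone converted")

    z = (rate_b - rate_a) / std_error
    # Two-sided p-value: the chance of a gap at least this large if A and B
    # truly performed the same.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical results: 200 of 1,000 visitors converted on A, 250 of 1,000 on B.
z, p_value = two_proportion_z_test(200, 1_000, 250, 1_000)
print(f"z = {z:.2f}, p-value = {p_value:.4f}")
print("Significant at the 95% level:", p_value < 0.05)
```

A p-value below 0.05 corresponds to the 95% threshold mentioned above. The test assumes the two groups were assigned at random and that the sample size was decided in advance.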

Test Only One Variable at a Time

Test a single variable at a time, such as the subscription button color or size, so you can accurately determine the impact of each change.

If you test multiple variables simultaneously, you won't know which change impacted the results.

But you can run multivariate tests to compare multiple modified variables at once (e.g., subscription button color, size, and copy).

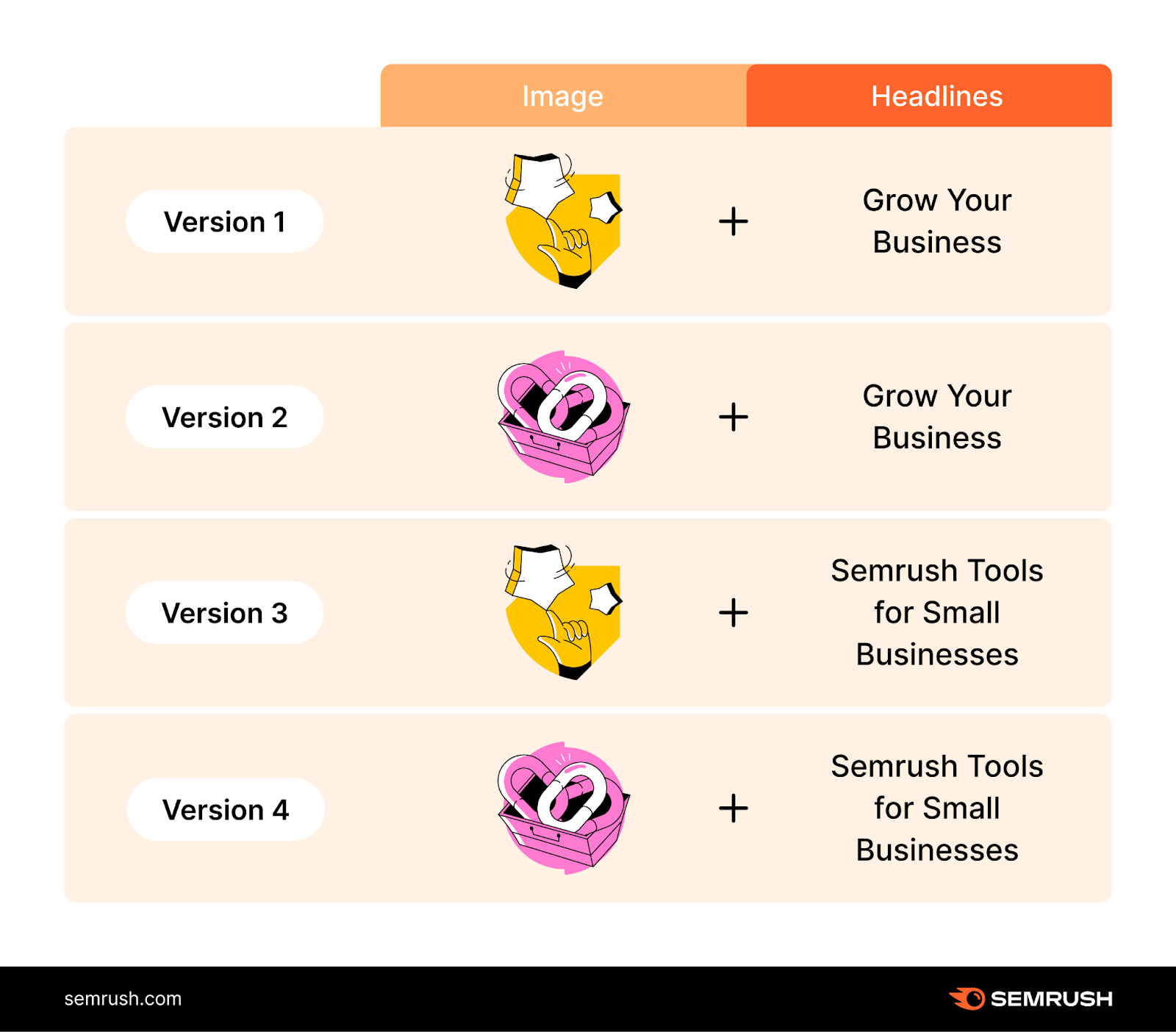

Say you want to measure the impact of the above-the-fold elements of a web page on conversions.

You could simultaneously test two landing page headlines and two header images. That means you’d create four test pages, each with a unique combination of elements.

Multivariate testing eliminates the need to run multiple A/B tests. A potential downside is that you'll need a large sample size for reliable results. So, if your website is new or has little traffic, you may be unable to gather enough data.
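The number of test pages grows quickly as you add elements, which is why sample size becomes a concern. Here is a short Python sketch that lists every combination for a multivariate test. The element names and values are placeholders, and `build_variants` is a made-up helper.

```python
from itertools import product
from typing import Dict, List


def build_variants(elements: Dict[str, List[str]]) -> List[Dict[str, str]]:
    """Return every combination of the options for each tested element."""
    names = list(elements)
    return [dict(zip(names, combo)) for combo in product(*elements.values())]


# Placeholder options: two headlines and two header images.
variants = build_variants(
    {
        "headline": ["Headline 1", "Headline 2"],
        "header_image": ["Image 1", "Image 2"],
    }
)

for number, variant in enumerate(variants, start=1):
    print(f"Page {number}: {variant}")
```

Two headlines and two images give four pages. Adding a third element with three options would raise that to 12, and each page needs enough traffic of its own.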

Common A/B Testing Mistakes

A/B testing can be more or less straightforward, depending on your business model and conversion goals. But even small mistakes, such as split-testing too soon, can impact the results.

Here are some common pitfalls to avoid during the testing process.

Testing Too Many Variables Simultaneously

Split-testing too many changes at once can create confusion and make it harder to gain meaningful insights. You won't know which element or variation is responsible for the results.

Did your email open rate increase because you modified the subject line? Or, was it because you changed your CTA's placement?

You may also notice that some metrics improve while others worsen when testing multiple variables simultaneously. But you won't know which changes caused those metrics to decline.

Not Giving Your Tests Enough Time to Run

This common mistake can prevent you from achieving statistical significance. You won't have enough time to collect data, which can affect the test results.

The optimal test duration depends on your chosen variants and the intended outcome. As mentioned earlier, one month should be enough time to see tangible results, but this time frame may vary.

Let's assume you compare two subject line versions and their impact on email open rates. In this case, you could run the test for just a few days.

But if you're comparing two web page variants and want to measure organic traffic, run the test for several weeks. As discussed earlier, Google and other search engines need time to index new pages.

Generally, it's best to run an A/B test until you achieve a confidence level of 95% or higher.

Ignoring the Impact of External Factors

A/B testing results depend on internal and external factors, and the external ones are outside your control. Ignoring these factors can lead to false positives or false negatives.

Imagine you run an online gift store and start an A/B test in November. You may compare two web page versions to see which brings in the most traffic.

But, with the holidays around the corner, consumers are more likely to visit your site and buy gifts. The increased seasonal traffic can skew your results.

Here are some other external factors that could interfere with your test:

- Time of day

- Social or economic factors

- Seasonal trends

- Regulatory changes

- Site outages

One solution could be to run your test for longer to collect more accurate data. That should give you a more balanced, realistic picture.

Overlooking the User Experience

Many marketers overlook the user experience and focus solely on metrics like CTRs or conversions when running A/B tests. This approach can lead to misleading results and hurt business performance.

Let’s say you start using clickbait headlines based on the test results. These often grab the reader's attention, which may increase website traffic and clicks.

But if the page content doesn't deliver on the headline's promises, it can negatively impact the user experience.

The result? Dissatisfied customers, lower engagement, and high bounce rates.

Consider both your short- and long-term goals when running A/B tests, and weigh the practical business implications instead of focusing solely on numbers.

Further reading: UX and SEO: The Best Strategy for Getting Results

Leverage A/B Testing Insights for Continuous Improvement

A/B testing can provide the insights you need to optimize your website, sales funnel, email campaigns, and online ads. When done right, it can lead to a better user experience, higher click-through rates, and increased engagement.

If you're new to this method, test the elements most likely to boost conversions. Some examples are your CTAs, website copy, and subject lines.

Semrush's SplitSignal can make your job easier.

Use the tool to design SEO A/B tests, measure the results in real time, and fine-tune your testing strategy. And before you make any permanent changes to your site, preview the results to ensure they won’t harm your traffic.

Remember to try our other tools to streamline A/B testing.

Start with the On Page SEO Checker to identify areas for site improvement. Then, leverage these insights to determine which variables to test for higher traffic.